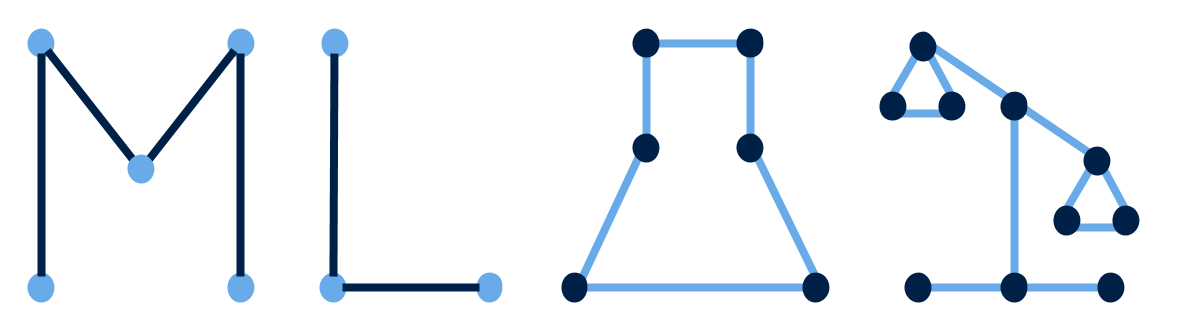

Class 3: ML Pipelines

Goals when using ML

Understanding the data (data science / actual science): lean more on probability and statistics, possibly fit a model, then examine and inspect the model parameters.

Understanding a model such as Naive Bayes: fit it to different data and see how the result varies.

Making claims about the learning algorithm: run multiple algorithms on the same data, possibly on multiple datasets.
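As a minimal sketch of the third goal, the snippet below scores two learning algorithms on the same data with the same cross-validation folds. The second algorithm (`DecisionTreeClassifier`), the iris data, and the seed `0` are assumptions chosen for illustration, not part of the notes.

```python
from sklearn import datasets
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

X, y = datasets.load_iris(return_X_y=True)

# Fixed folds so both algorithms are scored on exactly the same splits
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Hypothetical pair of algorithms to compare
models = {
    "GaussianNB": GaussianNB(),
    "DecisionTree": DecisionTreeClassifier(random_state=0),
}

# One array of per-fold accuracies per algorithm
results = {
    name: cross_val_score(model, X, y, cv=folds)
    for name, model in models.items()
}

for name, scores in results.items():
    print(f"{name}: mean={scores.mean():.3f} std={scores.std():.3f}")
```

Reporting the spread across folds alongside the mean makes it easier to judge whether a difference between two algorithms is larger than the fold-to-fold noise.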

Basic setup

train/test split

training parameters

estimator objects

fit model parameters

metrics

cross validation

import pandas as pd

import seaborn as sns

import numpy as np

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import GaussianNB

from sklearn.metrics import confusion_matrix, classification_report

from sklearn import datasets

iris_df = sns.load_dataset('iris')

sns.pairplot(iris_df, hue='species')

X,y = datasets.load_iris(return_X_y=True)

X.shape

y.shape

X_train, X_test, y_train, y_test = train_test_split(X, y)
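With no extra arguments, `train_test_split` shuffles the data and holds out 25% of the samples for testing, so the split differs from run to run. The sketch below shows the default sizes and one way to make the split reproducible and class-balanced. The names `X_tr`, `X_te`, and so on, and the seed `0`, are assumptions for illustration.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split

X, y = datasets.load_iris(return_X_y=True)

# Default: shuffled, 75% train / 25% test
X_tr, X_te, y_tr, y_te = train_test_split(X, y)
print(X_tr.shape, X_te.shape)

# Reproducible and stratified: the seed fixes the shuffle, and
# stratify=y keeps class proportions similar in both parts
X_tr_s, X_te_s, y_tr_s, y_te_s = train_test_split(
    X, y, random_state=0, stratify=y
)
test_class_counts = np.bincount(y_te_s)
print(test_class_counts)
```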

gnb = GaussianNB()

gnb.__dict__

gnb.fit(X_train,y_train)

gnb.__dict__
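Comparing `__dict__` before and after `fit` shows which attributes were learned from the data; in scikit-learn these end with an underscore. A minimal sketch of that comparison follows, fitting on the full iris data for simplicity (an assumption; the notes fit on the training split).

```python
from sklearn import datasets
from sklearn.naive_bayes import GaussianNB

X, y = datasets.load_iris(return_X_y=True)

model = GaussianNB()
before = set(vars(model))   # constructor parameters only
model.fit(X, y)

# Attributes that exist only after fitting
learned = sorted(set(vars(model)) - before)
print(learned)

print(model.classes_)       # class labels seen during fit
print(model.class_prior_)   # estimated probability of each class
print(model.theta_.shape)   # per-class feature means: (n_classes, n_features)
```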

X_test[0]

y_pred = gnb.predict(X_test)

y_pred[:5]

y_test[:5]

confusion_matrix(y_test, y_pred)
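In the confusion matrix, rows are the true classes and columns are the predicted classes, so correct predictions sit on the diagonal. As a sketch under that reading, accuracy can be recovered as the diagonal sum divided by the total, which should match `score`. The seed `0` is an arbitrary choice for reproducibility.

```python
import numpy as np
from sklearn import datasets
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = datasets.load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

gnb = GaussianNB().fit(X_train, y_train)
y_pred = gnb.predict(X_test)

# Rows: true class, columns: predicted class
cm = confusion_matrix(y_test, y_pred, labels=[0, 1, 2])
print(cm)

# Diagonal entries are the correct predictions
accuracy_from_cm = np.trace(cm) / cm.sum()
print(accuracy_from_cm, gnb.score(X_test, y_test))
```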

gnb.score(X_test,y_test)

gnb2 = GaussianNB(priors=[.5,.25,.25])

gnb2_cv_scores = cross_val_score(gnb2,X_train,y_train)

np.mean(gnb2_cv_scores)

gnb_cv_scores = cross_val_score(gnb,X_train,y_train)

np.mean(gnb_cv_scores)
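By default `cross_val_score` uses 5 folds and returns one score per fold. It fits clones of the estimator, so the object passed in is not modified. The sketch below illustrates both points on the full iris data (an assumption; the notes cross-validate on the training split).

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

X, y = datasets.load_iris(return_X_y=True)

unfit_gnb = GaussianNB()
scores = cross_val_score(unfit_gnb, X, y)  # default cv=5

# One accuracy per fold, summarized by mean and spread
print(len(scores), np.mean(scores), np.std(scores))

# The original estimator is still unfitted: no learned attributes
still_unfitted = not hasattr(unfit_gnb, "theta_")
print(still_unfitted)
```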

print(classification_report(y_test,y_pred))

gnb.predict_proba(X_test)
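`predict_proba` returns one row per sample and one column per class, in the order given by `classes_`. As a small sketch, each row should sum to 1, and taking the most probable class per row should reproduce `predict`. The seed `0` is an arbitrary choice.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = datasets.load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

gnb = GaussianNB().fit(X_train, y_train)

proba = gnb.predict_proba(X_test)
print(proba.shape)  # (n_test_samples, n_classes)

# Each row is a probability distribution over the classes
rows_sum_to_one = np.allclose(proba.sum(axis=1), 1.0)

# The most probable class per row matches the hard prediction
labels_from_proba = gnb.classes_[np.argmax(proba, axis=1)]
matches_predict = np.array_equal(labels_from_proba, gnb.predict(X_test))
print(rows_sum_to_one, matches_predict)
```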