MLSS#

2022-02-09#

Lead Scribe: Chamudi Kashmila

Paper - Graphical Models for Inference with Missing Data#

paper – Presented by Chamudi#

Graphical Models for Inference with Missing Data#

Missing data can have several harmful consequences.#

First, they can significantly bias the outcome of research studies.

This is mainly because the response profiles of non-respondents and respondents can be significantly different from each other. Hence ignoring the former distorts the true proportion in the population.

Second, performing the analysis using only complete cases and ignoring the cases with missing values can reduce the sample size, thereby substantially reducing estimation efficiency.

Lastly, many algorithms and statistical techniques are tailored to draw inferences from complete datasets, so it may be difficult or even inappropriate to apply them to incomplete datasets.

Existing Methods for Handling Missing Data#

Listwise deletion (LD) and pairwise deletion (PD) are used in approximately 96% of studies in the social and behavioral sciences.

The expectation-maximization (EM) algorithm (K-Means can be viewed as a hard-assignment variant) is a general technique for finding maximum likelihood (ML) estimates from incomplete data.

ML is often used in conjunction with imputation methods such as mean substitution, hot-deck imputation, cold-deck imputation, and multiple imputation (MI).

This paper aims to illuminate missing data problems using causal graphs.

The objectives are:

Given a target relation Q to be estimated and a set of assumptions about the missingness process encoded in a graphical model, (i) under what conditions does a consistent estimate exist, and (ii) how can we produce it from the data available? These questions are answered with the aid of missingness graphs (m-graphs).

Review the traditional taxonomy of missing data problems and cast it in graphical terms.

Define the notion of recoverability (the existence of a consistent estimate) and present graphical conditions for detecting recoverability of a given probabilistic query Q.

Graphical Representation of the Missingness Process#

Graphical models have been used to analyze missing information in the form of missing cases.

The need exists for a general approach capable of modeling an arbitrary data-generating process and deciding whether (and how) missingness can be outmaneuvered in every dataset generated by that process.

Such a general approach should allow each variable to be governed by its own missingness mechanism, and each mechanism to be triggered by other partially observed variables in the model.

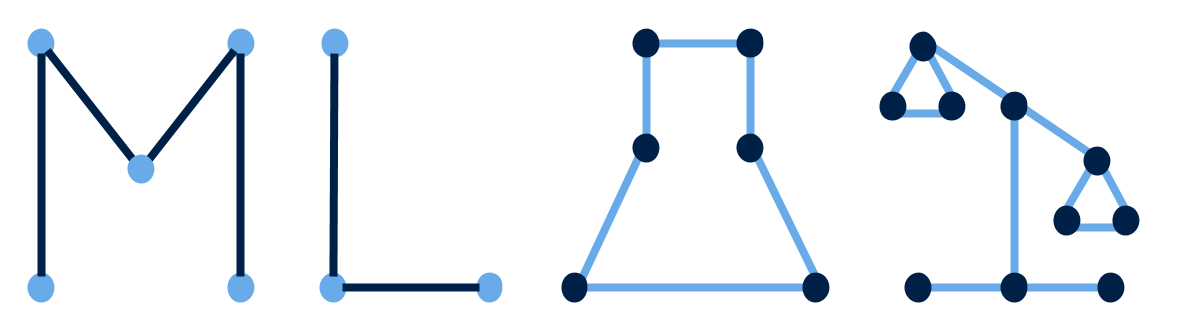

To achieve this flexibility we use a graphical model called a missingness graph, which is a DAG (Directed Acyclic Graph) defined as follows.

Missingness Graphs#

Let G(𝕍, E) be the causal DAG where 𝕍 = V ∪ U ∪ V* ∪ ℝ

V is the set of observable nodes. Nodes in the graph correspond to variables in the data set.

U is the set of unobserved nodes.

E is the set of edges in the DAG.

V* is the set of all proxy variables.

ℝ is the set of all causal mechanisms that are responsible for missingness.
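Each partially observed variable has a proxy in V* that holds what the dataset actually records, and a mechanism in ℝ that decides whether the value is masked. The later examples cite this relation as Eq. (1). Written out, with m as an assumed symbol for a missing entry, it reads:

$$
v_i^* = f(r_{v_i}, v_i) =
\begin{cases}
v_i & \text{if } r_{v_i} = 0 \\
m & \text{if } r_{v_i} = 1
\end{cases}
\tag{1}
$$

So R = 0 means "observed" and R = 1 means "missing", which is why the recoverability arguments condition on R = 0.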

Oftentimes we use bi-directed edges as a shorthand notation to denote the existence of a U variable as common parent of two variables in Vo ∪ Vm ∪ ℝ.

V is partitioned into Vo and Vm such that

Vo ⊆ V is the set of variables that are observed in all records in the population

Vm ⊆ V is the set of variables that are missing in at least one record.

Variable X is termed as fully observed if X∈Vo and partially observed if X∈Vm.

This graphical representation succinctly depicts both the causal relationships among variables in V and the process that accounts for missingness in some of the variables.

Since every d-separation in the graph implies conditional independence in the distribution, the m-graph provides an effective way of representing the statistical properties of the missingness process and, hence, the potential of recovering the statistics of variables in Vm from partially missing data.
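To make the role of d-separation concrete, here is a minimal sketch of a d-separation test using the standard moralized-ancestral-graph criterion. The function names and the two edge lists are assumptions introduced for illustration: `FIG_MCAR` encodes X → Y with an independent Ry, and `FIG_MAR` adds X → Ry, which is how these notes describe the MCAR and MAR examples.

```python
from itertools import combinations

def ancestors(parents, nodes):
    """Return the given nodes together with all of their ancestors."""
    seen, stack = set(), list(nodes)
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(parents.get(node, ()))
    return seen

def d_separated(parents, xs, ys, zs):
    """True if xs and ys are d-separated given zs in the DAG `parents`.

    Criterion: keep only ancestors of xs, ys and zs; connect each node to
    its parents and marry co-parents (moralization); delete zs; then xs and
    ys are d-separated iff they are disconnected in the undirected graph.
    """
    xs, ys, zs = set(xs), set(ys), set(zs)
    keep = ancestors(parents, xs | ys | zs)
    adj = {node: set() for node in keep}
    for child in keep:
        pas = list(parents.get(child, ()))
        for p in pas:
            adj[child].add(p)
            adj[p].add(child)
        for a, b in combinations(pas, 2):
            adj[a].add(b)
            adj[b].add(a)
    seen, stack = set(), [x for x in xs if x not in zs]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(n for n in adj[node] if n not in zs and n not in seen)
    return not (seen & ys)

# Hypothetical encodings (child -> list of parents) of the two examples.
FIG_MCAR = {"Y": ["X"], "Y*": ["Y", "Ry"]}
FIG_MAR = {"Y": ["X"], "Ry": ["X"], "Y*": ["Y", "Ry"]}

print(d_separated(FIG_MCAR, {"X", "Y"}, {"Ry"}, set()))  # (X ∪ Y) ∐ Ry
print(d_separated(FIG_MAR, {"Y"}, {"Ry"}, {"X"}))        # Y ∐ Ry | X
print(d_separated(FIG_MAR, {"Y"}, {"Ry"}, set()))        # without conditioning on X
```

The first two queries are the independencies used in the MCAR and MAR recoverability arguments; the third shows that in the MAR graph the independence only holds once X is conditioned on.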

Taxonomy of Missingness Mechanisms#

It is common to classify missing data mechanisms into three types:

Missing Completely At Random (MCAR): Data are MCAR if the probability that Vm is missing is independent of Vm or any other variable in the study, as would be the case when respondents decide to reveal their income levels based on coin-flips.

Missing At Random (MAR): Data are MAR if for all data cases Y, P(R|Yobs, Ymis) = P(R|Yobs), where Yobs denotes the observed component of Y and Ymis the missing component. Example: Women in the population are more likely to not reveal their age.

Missing Not At Random (MNAR) or “non-ignorable missing”: Data that are neither MAR nor MCAR are termed MNAR. Example: Online shoppers rate an item with a high probability either if they love the item or if they dislike it. In other words, the probability that a shopper supplies a rating is dependent on the shopper’s underlying liking.

In the graph-based interpretation used in this paper, MCAR is defined as total independence between ℝ and Vo ∪ Vm ∪ U, i.e. ℝ ∐ (Vo ∪ Vm ∪ U), as shown in Figure (a).

MAR is defined as independence between ℝ and Vm ∪ U given Vo, i.e. ℝ ∐ (Vm ∪ U) | Vo, as shown in Figure (b).

Finally, if neither of these conditions holds, data are termed MNAR, as shown in Figures (c) and (d).

Recoverability#

Recoverability asks whether a target quantity such as P(X, Y) can be consistently estimated from the available, partially missing dataset D. This section examines the conditions under which a bias-free estimate of a given probabilistic relation Q can be computed.

Definition (Recoverability). Given an m-graph G and a target relation Q defined on the variables in V, Q is said to be recoverable in G if there exists an algorithm that produces a consistent estimate of Q for every dataset D such that P(D) is (1) compatible with G and (2) strictly positive over complete cases, i.e. P(Vo, Vm, ℝ = 0) > 0.

Recoverability when data are MCAR#

For MCAR data we have ℝ ∐ (Vo ∪ Vm). Therefore, we can write P(V) = P(V | ℝ) = P(Vo, V* | ℝ = 0).

Since both ℝ and V* are observables, the joint probability P(V) is consistently estimable (recoverable) by considering complete cases only.

Example : Let X be the treatment and Y be the outcome as depicted in the m-graph in Fig. 1 (a). Let it be the case that we accidentally deleted the values of Y for a handful of samples, hence Y ∈ Vm. Can we recover P(X, Y )?

From D, we can compute P(X, Y , Ry).

From the m-graph G, we know that Y* is a collider (Y → Y* ← Ry) and hence by d-separation,

(X ∪ Y) ∐ Ry. Thus P(X, Y) = P(X, Y | Ry).

In particular, P(X, Y ) = P(X, Y |Ry = 0).

When Ry = 0, by eq. (1), Y = Y* .

Hence, P(X, Y ) = P(X, Y* |Ry = 0) (2)

The RHS of Eq. (2) is consistently estimable from D; hence P(X, Y) is recoverable.
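A small simulation can illustrate Eq. (2). This is only a sketch: the probabilities, the sample size and all variable names are illustrative assumptions, not values from the paper. Y is masked by an independent coin flip (MCAR), and P(X, Y) is estimated from the complete cases alone.

```python
import random
from collections import Counter

random.seed(0)
N = 200_000
P_X1 = 0.3                        # assumed P(X = 1)
P_Y1_GIVEN_X = {0: 0.2, 1: 0.7}   # assumed P(Y = 1 | X)
P_RY1 = 0.4                       # assumed P(Ry = 1), independent of X and Y

complete_cases = Counter()
for _ in range(N):
    x = int(random.random() < P_X1)
    y = int(random.random() < P_Y1_GIVEN_X[x])
    ry = int(random.random() < P_RY1)
    y_star = y if ry == 0 else None   # proxy: Y* = Y only when Ry = 0
    if ry == 0:
        complete_cases[(x, y_star)] += 1

# Estimate P(X, Y* | Ry = 0) from complete cases only.
n_complete = sum(complete_cases.values())
cells = [(0, 0), (0, 1), (1, 0), (1, 1)]
estimate = {cell: complete_cases[cell] / n_complete for cell in cells}

# True joint P(X, Y) implied by the assumed parameters.
truth = {
    (x, y): (P_X1 if x else 1 - P_X1)
    * (P_Y1_GIVEN_X[x] if y else 1 - P_Y1_GIVEN_X[x])
    for x, y in cells
}
max_error = max(abs(estimate[c] - truth[c]) for c in cells)
print("largest absolute error over the four cells:", max_error)
```

With this many samples the complete-case estimate should sit close to the true joint, which is what recoverability under MCAR predicts.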

Recoverability when data are MAR#

When data are MAR, we have ℝ ∐ Vm | Vo. Therefore P(V) = P(Vm | Vo)P(Vo) = P(Vm | Vo, ℝ = 0)P(Vo). Hence the joint distribution P(V) is recoverable.

Example : Let X be the treatment and Y be the outcome as shown in the m-graph in Fig. 1 (b). Let it be the case that some patients who underwent treatment are not likely to report the outcome, hence the arrow X → Ry. Under the circumstances, can we recover P(X, Y )?

From D, we can compute P(X, Y , Ry).

From the m-graph G, we see that Y* is a collider (Y → Y* ← Ry) and X is a fork (Y ← X → Ry).

Hence by d-separation, Y ∐ Ry | X.

Thus P(X, Y ) = P(Y |X)P(X) = P(Y |X, Ry)P(X).

In particular, P(X, Y ) = P(Y |X, Ry = 0)P(X).

When Ry = 0, by eq. (1), Y* = Y.

Hence, P(X, Y ) = P(Y* |X, Ry = 0)P(X) (3)

and since X is fully observed, P(X, Y) is recoverable.
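The difference between Eq. (3) and a naive complete-case analysis can be checked exactly on a toy distribution. The sketch below assumes illustrative parameter values and hypothetical names. It builds the observed-data distribution over (X, Y*, Ry) for a model with X → Y and X → Ry, then compares both estimands with the true joint.

```python
from itertools import product

P_X = {0: 0.7, 1: 0.3}                                    # assumed P(X)
P_Y_GIVEN_X = {0: {0: 0.8, 1: 0.2}, 1: {0: 0.3, 1: 0.7}}  # assumed P(Y = y | X = x)
P_RY1_GIVEN_X = {0: 0.1, 1: 0.6}                          # assumed P(Ry = 1 | X = x)

# Observed-data distribution over (x, y*, ry): y is masked when ry = 1.
observed = {}
for x, y, ry in product((0, 1), repeat=3):
    p_ry = P_RY1_GIVEN_X[x] if ry == 1 else 1 - P_RY1_GIVEN_X[x]
    key = (x, y if ry == 0 else None, ry)
    observed[key] = observed.get(key, 0.0) + P_X[x] * P_Y_GIVEN_X[x][y] * p_ry

def p_x(x):
    """P(X = x), available because X is fully observed."""
    return sum(p for (xx, _, _), p in observed.items() if xx == x)

def p_ystar_given_x_observed(y, x):
    """P(Y* = y | X = x, Ry = 0)."""
    return observed[(x, y, 0)] / sum(observed[(x, yy, 0)] for yy in (0, 1))

cells = list(product((0, 1), repeat=2))
truth = {(x, y): P_X[x] * P_Y_GIVEN_X[x][y] for x, y in cells}

# Eq. (3): P(X, Y) = P(Y* | X, Ry = 0) P(X)
recovered = {(x, y): p_ystar_given_x_observed(y, x) * p_x(x) for x, y in cells}

# Naive complete-case estimand: P(X, Y* | Ry = 0)
p_complete = sum(observed[(x, y, 0)] for x, y in cells)
complete_case = {(x, y): observed[(x, y, 0)] / p_complete for x, y in cells}

for cell in cells:
    print(cell, truth[cell], recovered[cell], complete_case[cell])
```

Under these assumed numbers the Eq. (3) estimand matches the truth in every cell, while the complete-case joint does not, because treated units are under-represented among the complete cases.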

Recoverability when data are MNAR#

Data that are neither MAR nor MCAR are termed MNAR. Though it is generally believed that relations in MNAR datasets are not recoverable, the following example demonstrates otherwise.

Example : Fig. 1 (d) depicts a study where (i) some units who underwent treatment (X = 1) did not report the outcome (Y ) and (ii) we accidentally deleted the values of treatment for a handful of cases. Thus we have missing values for both X and Y which renders the dataset MNAR. We shall show that P(X, Y ) is recoverable.

From D, we can compute P(X*, Y*, Rx, Ry). From the m-graph G, we see that X ∐ Rx and Y ∐ (Rx ∪ Ry)|X.

Thus P(X, Y ) = P(Y |X)P(X) = P(Y |X, Ry = 0, Rx = 0)P(X|Rx = 0). When Ry = 0 and Rx = 0 we have (by Equation (1) ), Y = Y* and X = X*.

Hence, P(X,Y) = P(Y* |X* , Rx = 0 , Ry = 0)P(X* |Rx = 0) (4)

Therefore, P(X, Y) is recoverable.
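Eq. (4) can be tried on simulated data. The following is a sketch with assumed parameters and hypothetical names: X is deleted by an independent coin flip (so X ∐ Rx), while missingness of Y depends on the partially observed X, which is what makes the data MNAR.

```python
import random
from collections import Counter

random.seed(1)
N = 300_000
P_X1 = 0.3                         # assumed P(X = 1)
P_Y1_GIVEN_X = {0: 0.2, 1: 0.7}    # assumed P(Y = 1 | X)
P_RX1 = 0.2                        # assumed P(Rx = 1): accidental deletion of X
P_RY1_GIVEN_X = {0: 0.1, 1: 0.5}   # assumed P(Ry = 1 | X): treated units skip Y

both_observed = Counter()  # counts of (x*, y*) among rows with Rx = 0 and Ry = 0
x_observed = Counter()     # counts of x* among rows with Rx = 0
for _ in range(N):
    x = int(random.random() < P_X1)
    y = int(random.random() < P_Y1_GIVEN_X[x])
    rx = int(random.random() < P_RX1)
    ry = int(random.random() < P_RY1_GIVEN_X[x])
    if rx == 0:
        x_observed[x] += 1
        if ry == 0:
            both_observed[(x, y)] += 1

# Eq. (4): P(X, Y) = P(Y* | X*, Rx = 0, Ry = 0) P(X* | Rx = 0)
n_x_observed = sum(x_observed.values())
estimate = {}
for x in (0, 1):
    n_both_x = both_observed[(x, 0)] + both_observed[(x, 1)]
    for y in (0, 1):
        p_y_given_x = both_observed[(x, y)] / n_both_x
        estimate[(x, y)] = p_y_given_x * x_observed[x] / n_x_observed

truth = {
    (x, y): (P_X1 if x else 1 - P_X1)
    * (P_Y1_GIVEN_X[x] if y else 1 - P_Y1_GIVEN_X[x])
    for x in (0, 1) for y in (0, 1)
}
max_error = max(abs(estimate[c] - truth[c]) for c in truth)
print("largest absolute error over the four cells:", max_error)
```

Only quantities available in the incomplete data (X*, Y*, Rx, Ry) enter the estimate; the true X and Y are used solely to generate the data and to score the result.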

Conditions for Recoverability#

How can we determine if a given relation is recoverable?

Theorem 1 provides a sufficient condition for recoverability.

Theorem 1

A query Q defined over variables in Vo ∪ Vm is recoverable if it is decomposable into terms of the form Qj = P(Sj | Tj) such that Tj contains the missingness mechanism Rv = 0 of every partially observed variable V that appears in Qj.

Proof:

If such a decomposition exists, every Qj is estimable from the data, hence the entire expression for Q is recoverable.

Example : Consider the problem of recovering Q = P(X, Y ) from the m-graph of Fig. 3(b).

Attempts to decompose Q by the chain rule, as was done in Eq. (3), P(X, Y) = P(Y* | X, Ry = 0)P(X), and in Eq. (4), would not satisfy the conditions of Theorem 1.

To see this, we write P(X, Y) = P(Y | X)P(X) and note that the graph does not permit us to augment either of the two terms with the necessary Rx or Ry terms;

X is independent of Rx only if we condition on Y , which is partially observed, and Y is independent of Ry only if we condition on X which is also partially observed.

This deadlock can be disentangled, however, using a non-conventional decomposition:
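Written out from the two independencies named in the next sentence (a reconstruction, so the layout and the equation number are assumptions), the decomposition is:

$$
P(X, Y) = P(X, Y)\,\frac{P(R_x = 0, R_y = 0 \mid X, Y)}{P(R_x = 0, R_y = 0 \mid X, Y)}
= \frac{P(R_x = 0, R_y = 0)\,P(X, Y \mid R_x = 0, R_y = 0)}{P(R_x = 0 \mid Y, R_y = 0)\,P(R_y = 0 \mid X, R_x = 0)}
\tag{5}
$$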

where the denominator was obtained using the independencies Rx ∐ (X, Ry)|Y and Ry ∐ (Y, Rx)|X shown in the graph.

The final expression above satisfies Theorem 1 and renders P(X,Y) recoverable.

This example again shows that recovery is feasible even when data are MNAR.
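The non-conventional decomposition can be checked exactly on a toy model. The sketch below assumes a graph with Y → Rx and X → Ry, which yields the two independencies quoted above. It uses illustrative numbers and hypothetical names, and evaluates P(Rx = 0, Ry = 0) P(X, Y | Rx = 0, Ry = 0) / [P(Rx = 0 | Y, Ry = 0) P(Ry = 0 | X, Rx = 0)] from the observed-data distribution only.

```python
from itertools import product

P_XY = {(0, 0): 0.35, (0, 1): 0.15, (1, 0): 0.10, (1, 1): 0.40}  # assumed joint
P_RX1_GIVEN_Y = {0: 0.2, 1: 0.6}   # assumed P(Rx = 1 | Y): Y -> Rx
P_RY1_GIVEN_X = {0: 0.1, 1: 0.5}   # assumed P(Ry = 1 | X): X -> Ry

def bern(p_one, r):
    """Probability that a Bernoulli(p_one) variable equals r."""
    return p_one if r == 1 else 1 - p_one

# Observed-data distribution over (x*, y*, rx, ry); masked values become None.
observed = {}
for (x, y), p_xy in P_XY.items():
    for rx, ry in product((0, 1), repeat=2):
        p = p_xy * bern(P_RX1_GIVEN_Y[y], rx) * bern(P_RY1_GIVEN_X[x], ry)
        key = (x if rx == 0 else None, y if ry == 0 else None, rx, ry)
        observed[key] = observed.get(key, 0.0) + p

def prob(event):
    """Total observed-data probability of rows satisfying `event`."""
    return sum(p for key, p in observed.items() if event(*key))

p_r00 = prob(lambda xs, ys, rx, ry: rx == 0 and ry == 0)
recovered, complete_case = {}, {}
for x, y in P_XY:
    p_xy_given_r00 = observed[(x, y, 0, 0)] / p_r00
    # P(Rx = 0 | Y* = y, Ry = 0)
    p_rx0 = (prob(lambda xs, ys, rx, ry: rx == 0 and ry == 0 and ys == y)
             / prob(lambda xs, ys, rx, ry: ry == 0 and ys == y))
    # P(Ry = 0 | X* = x, Rx = 0)
    p_ry0 = (prob(lambda xs, ys, rx, ry: rx == 0 and ry == 0 and xs == x)
             / prob(lambda xs, ys, rx, ry: rx == 0 and xs == x))
    recovered[(x, y)] = p_r00 * p_xy_given_r00 / (p_rx0 * p_ry0)
    complete_case[(x, y)] = p_xy_given_r00

for cell in P_XY:
    print(cell, P_XY[cell], recovered[cell], complete_case[cell])
```

Every factor conditions on R = 0 for each partially observed variable it mentions, as Theorem 1 requires, and under these assumed numbers the recovered joint equals the true one while the complete-case joint does not.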

Theorem 2 operationalizes the decomposability requirement of Theorem 1.

Theorem 2

(Recoverability of the Joint P(V)). Given an m-graph G with no edges between the R variables and no latent variables as parents of R variables, a necessary and sufficient condition for recovering the joint distribution P(V) is that no variable X be a parent of its missingness mechanism Rx. Moreover, when recoverable, P(V) is given by
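A form consistent with these conditions follows from writing P(v) = P(ℝ = 0, v) / P(ℝ = 0 | v) and factorizing the denominator over the individual mechanisms. The parent-set notation is an assumption introduced here: Pa^o and Pa^m denote the fully and partially observed parents of R_i.

$$
P(v) = \frac{P(\mathbb{R} = 0, v)}{\prod_i P\left(R_i = 0 \mid pa^{o}_{r_i},\, pa^{m}_{r_i},\, R_{Pa^{m}_{r_i}} = 0\right)}
$$

The decomposition used for the two-variable MNAR example above is the special case with one partially observed parent per mechanism.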

Theorem 3 gives a sufficient condition for recovering the joint distribution in a Markovian model.

Theorem 3

Given an m-graph with no latent variables (i.e., Markovian) the joint distribution P(V) is recoverable if no missingness mechanism Rx is a descendant of its corresponding variable X. Moreover, if recoverable, then P(V) is given by

Theorem 4

A sufficient condition for recoverability of a relation Q is that Q be decomposable into an ordered factorization, or a sum of such factorizations, such that every factor Qi = P(Yi |Xi) satisfies Yi ∐ (Ryi , Rxi )|Xi . A factorization that satisfies this condition will be called admissible.

Theorem 4 will allow us to confirm recoverability of certain queries Q in models such as those in Figures a, c, d, which do not satisfy the requirement in Theorem 2.

P(X|Y ) = P(X|Rx = 0, Ry = 0, Y) is recoverable

P(X, Y, Z) = P(Z|X, Y, Rz = 0, Rx = 0, Ry = 0) P(X|Y, Rx = 0, Ry = 0) P(Y |Ry = 0) is recoverable

P(X, Z) = P(X, Z|Rx = 0, Rz = 0) is recoverable

Conclusion#

Causal graphical models depicting the data-generating process can serve as a powerful tool for analyzing missing data problems.

The paper formalized the notion of recoverability and showed that relations are always recoverable when data are missing at random (MCAR or MAR) and can be recoverable even when data are missing not at random (MNAR), as the MNAR examples above illustrate.

It presented a sufficient condition to ensure recoverability of a given relation Q (Theorem 1) and operationalized Theorem 1 using graphical criteria (Theorems 2, 3 and 4).