2022-02-23#

Lead Scribe: Derek

Fairness and Causality/Experiments#

Causality#

Presented by Surbhi

Main Goal

Learn more about how causality can help us understand a situation

Passive observation

Something we observe in passing, without intervening or giving it our full attention

Like noticing a car driving by you

Data collected this way shows a snapshot of the world as it is

Causal reasoning

Important questions are not usually observational in nature

Would traffic fatalities decrease if we raised the legal driving age by 2 years?

Not every causal question is easy to address

Causal inference gives a formal language to ask questions, but does not trivialize finding the answer

Supporting 3 purposes for understanding causality

Conceptualize and address limits of observational techniques

Provide tools to help design interventions that achieve a desired effect

Engage with the important debate about when and how much reasoning about discrimination and fairness requires causal understanding

Simpson’s Paradox

Broken down by department, several departments show a higher acceptance rate among women, while two show a higher acceptance rate for men

There’s variation between departments, and so the higher rate for men may be seen in aggregate but reversed at the department level

This is one example of us misinterpreting what conditional probabilities encode

If we look at the overall data, it looks like admissions are unfair and favor men, but broken down by department it looks like it’s the other way around

It is not the admission decision itself that makes it look this way; it depends on which departments women and men tend to apply to

For example engineering vs English vs other
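A minimal sketch of the reversal, using made-up counts (the two departments and all numbers below are hypothetical, not the data from the lecture). Women have the higher rate inside each department, yet men have the higher rate in aggregate, because most women apply to the department that admits fewer people.

```python
# Hypothetical counts, invented for illustration: (applicants, admitted).
applications = {
    "A": {"men": (800, 480), "women": (100, 70)},   # department that admits many
    "B": {"men": (200, 40), "women": (900, 270)},   # department that admits few
}

def rate(applied, admitted):
    return admitted / applied

def department_rates(data):
    """Acceptance rate per department and group."""
    return {
        dept: {group: rate(*counts) for group, counts in groups.items()}
        for dept, groups in data.items()
    }

def aggregate_rates(data):
    """Acceptance rate per group after pooling all departments."""
    totals = {}
    for groups in data.values():
        for group, (applied, admitted) in groups.items():
            prev_applied, prev_admitted = totals.get(group, (0, 0))
            totals[group] = (prev_applied + applied, prev_admitted + admitted)
    return {group: rate(*counts) for group, counts in totals.items()}

per_dept = department_rates(applications)
overall = aggregate_rates(applications)

for dept, rates in per_dept.items():
    print(dept, rates)
print("overall", overall)
```

The conditional probabilities are all correct; what changes is which population each one is conditioned on.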

Causal Models

Causal inference can be used to guide the design of studies

Choosing which variables to include, which to exclude, which to hold constant

Serve as a mechanism to incorporate scientific domain knowledge and exchange plausible assumptions for plausible conclusions

Structural Causal Models

A sequence of assignments for generating a joint distribution from independent noise variables

By executing the sequence of assignments, we build a set of jointly distributed random variables

Provides a joint distribution and describes how the distribution can be generated from elementary noise variables

A Probabilistic model

Different set of operations

Useful for considering hypothetical scenarios differently than if you have a single set of data

How we can intervene in a world AND measure the effect of that intervention

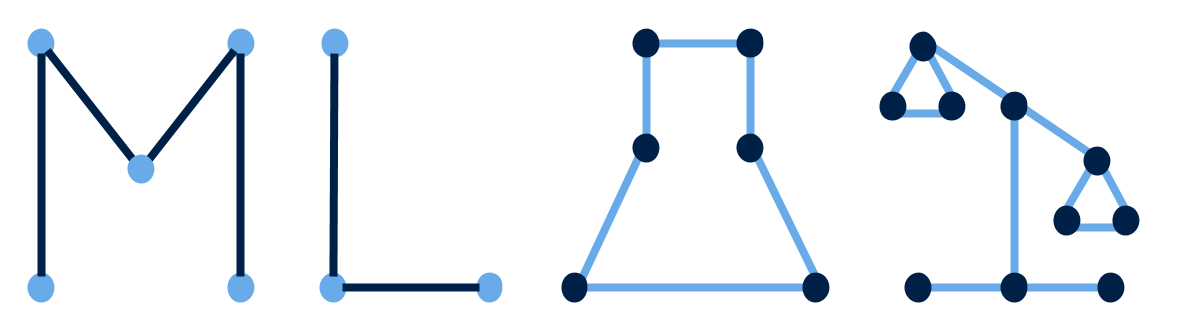
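A minimal sketch of a structural causal model as a sequence of assignments, assuming a toy two-variable model that is not from the lecture: `X` and `Y`, the probabilities, and the helper names `sample_scm` and `mean_of` are all hypothetical. Running the assignments produces samples from the joint distribution; passing `do` replaces an assignment with a fixed value, which is one way to express an intervention and measure its effect.

```python
import random

def sample_scm(rng, do=None):
    """Run the assignments once; `do` maps a variable name to a forced value."""
    do = do or {}
    # Independent noise variables.
    u_x = rng.random()
    u_y = rng.random()
    # Assignments are executed in order; an intervention replaces an assignment.
    x = do["X"] if "X" in do else int(u_x < 0.5)
    y = do["Y"] if "Y" in do else int(u_y < (0.8 if x == 1 else 0.2))
    return {"X": x, "Y": y}

def mean_of(var, n, seed, do=None):
    """Estimate the mean of `var` from n samples of the (possibly intervened) model."""
    rng = random.Random(seed)
    return sum(sample_scm(rng, do)[var] for _ in range(n)) / n

p_y = mean_of("Y", 20000, seed=0)                    # world as it is
p_y_do_x1 = mean_of("Y", 20000, seed=0, do={"X": 1})  # world where we force X = 1
p_y_do_x0 = mean_of("Y", 20000, seed=0, do={"X": 0})  # world where we force X = 0

print(p_y, p_y_do_x1, p_y_do_x0)
```

The same code answers both the observational question and the hypothetical one, which is what a single fixed dataset cannot do.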

Causal Graphs

Uses the parent notation we saw last week, interpreted a little differently; we can take a model and build a graph from it

Can go from graph to model too

We look at a directed graph as a placeholder for an unspecified structural causal model that has the assignment structure given by the graph
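A small sketch of going from a model's assignment structure to a graph, assuming a hypothetical three-variable model where `Z` depends on `X` and `Y` depends on `X` and `Z`. Each variable lists its parents; every parent contributes one directed edge, and a topological order of the graph is a valid order in which to run the assignments.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical assignment structure: variable -> parents it is computed from.
parents = {
    "X": [],
    "Z": ["X"],
    "Y": ["X", "Z"],
}

# One directed edge (parent, child) per dependency.
edges = [(parent, child) for child, ps in parents.items() for parent in ps]

# TopologicalSorter takes node -> predecessors, which is exactly `parents`.
order = list(TopologicalSorter(parents).static_order())

print(edges)
print(order)
```

Going the other way, the graph only fixes which variables appear on the right-hand side of each assignment; the functions and noise distributions stay unspecified.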

Graph Structure

Forks

Has outgoing edges to two other variables

Aka a common cause of the other variables

Mediators

Different from a fork: x causes z and z causes y, so x can cause y through z as well as directly

Colliders

Not confounders

X and Y are unconfounded, and we can replace do-statements by conditional probabilities
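A simulation sketch contrasting a fork with a collider, assuming toy binary variables and made-up probabilities (none of the numbers or function names come from the lecture). In the fork, `Z` causes both `X` and `Y`, so conditioning on `X = 1` differs from intervening with do(`X = 1`). In the collider, `X` and `Y` are unconfounded, so the two estimates agree up to sampling noise.

```python
import random

def bern(rng, p):
    """Draw 1 with probability p, else 0."""
    return int(rng.random() < p)

def fork(rng, do_x=None):
    # Z -> X and Z -> Y, with no edge from X to Y.
    z = bern(rng, 0.5)
    x = do_x if do_x is not None else bern(rng, 0.9 if z else 0.1)
    y = bern(rng, 0.8 if z else 0.2)
    return x, y

def collider(rng, do_x=None):
    # X -> Z <- Y; Z is a common effect and feeds into neither X nor Y.
    x = do_x if do_x is not None else bern(rng, 0.5)
    y = bern(rng, 0.3)
    z = x | y  # computed only to show the structure; never conditioned on here
    return x, y

def p_y_given_x1(model, n=50000, seed=1):
    """Observational estimate of P(Y = 1 | X = 1)."""
    rng = random.Random(seed)
    ys = [y for x, y in (model(rng) for _ in range(n)) if x == 1]
    return sum(ys) / len(ys)

def p_y_do_x1(model, n=50000, seed=2):
    """Interventional estimate of P(Y = 1 | do(X = 1))."""
    rng = random.Random(seed)
    return sum(model(rng, do_x=1)[1] for _ in range(n)) / n

fork_obs, fork_do = p_y_given_x1(fork), p_y_do_x1(fork)
coll_obs, coll_do = p_y_given_x1(collider), p_y_do_x1(collider)

print("fork    ", fork_obs, fork_do)
print("collider", coll_obs, coll_do)
```

With these probabilities the fork gives roughly 0.74 observationally versus 0.5 under intervention, while both collider estimates sit near 0.3.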

The Harvard Admission example

The story

An Asian American male applicant with a 25% admission chance would have a 36% chance as white, 77% as Hispanic, and 95% as African American

Statistically invalid because it assumes everything on the application stays exactly the same except for race, but typically many other things would change along with race

Empirical Comparison#

Presented by Chan

Main Goal

A meta-analysis paper comparing fair machine learning algorithms

Comparison of algorithms they’d found

Problem

Fairness

Comes with sensitive data

Age, race, gender, etc

The Experiments

Testing on

Preprocessing

Data is preprocessed in 2 ways before being fed into the algorithms, which may or may not preprocess it further

Motivated by the idea that training data is the cause of discrimination

If the training data is discriminatory then the results will be too, so we can preprocess it to make it more fair

Modifications to the algorithms

Postprocessing

Once we have a result, can we prune or round items to get better results?

The Results

Measures of discrimination correlate with each other

Algorithms make different fairness-accuracy tradeoffs

Algorithms are fragile: they are sensitive to variations in the input
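A toy sketch of a fairness-accuracy tradeoff, assuming disparate impact (the ratio of positive-prediction rates between groups) as the fairness measure. The labels, groups, and the two sets of predictions are hypothetical and stand in for the outputs of two different algorithms.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def positive_rate(y_pred, group, value):
    """Fraction of positive predictions within one group."""
    preds = [p for p, g in zip(y_pred, group) if g == value]
    return sum(preds) / len(preds)

def disparate_impact(y_pred, group, unprivileged=0, privileged=1):
    """Ratio of positive rates; 1.0 means both groups are treated alike."""
    return (positive_rate(y_pred, group, unprivileged)
            / positive_rate(y_pred, group, privileged))

# Hypothetical data: 1 = privileged group, 0 = unprivileged group.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
group  = [1, 1, 1, 1, 0, 0, 0, 0]
pred_a = [1, 0, 1, 1, 0, 1, 0, 0]  # "algorithm A": reproduces the labels
pred_b = [1, 0, 1, 0, 0, 1, 1, 0]  # "algorithm B": equalizes positive rates

for name, pred in [("A", pred_a), ("B", pred_b)]:
    print(name, accuracy(y_true, pred), disparate_impact(pred, group))
```

Here A is perfectly accurate but gives the unprivileged group a third of the positive rate, while B equalizes the rates at the cost of two misclassifications.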

Datasets

Ricci

Determining if firefighters would receive a promotion

Adult Income

Predicting income above or below $50k

German

Classifying people on good or bad credit risk

ProPublica Recidivism/Violent Recidivism

Predicting whether someone will commit a crime/violent crime again

Preprocessing

Modifying input according to any data-specific needs

Removing unneeded features, imputing missing data, etc

Add a combined sensitive attribute, e.g. “White-Woman”

Sensitive attribute treated as binary
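A minimal sketch of these two preprocessing choices, assuming rows stored as plain dictionaries. The helper names `add_combined_attribute` and `binarize`, the column names, and the choice of "White" as the privileged value are hypothetical and only for illustration.

```python
def add_combined_attribute(rows, attrs, sep="-"):
    """Return copies of rows with a new key joining the given sensitive attributes."""
    name = sep.join(attrs)
    out = []
    for row in rows:
        new_row = dict(row)
        new_row[name] = sep.join(str(row[a]) for a in attrs)
        out.append(new_row)
    return out

def binarize(rows, attr, privileged):
    """Return copies of rows where attr is 1 for the privileged value, else 0."""
    return [{**row, attr: int(row[attr] == privileged)} for row in rows]

# Hypothetical records.
people = [
    {"race": "White", "sex": "Woman"},
    {"race": "Black", "sex": "Man"},
]

combined = add_combined_attribute(people, ["race", "sex"])
binary = binarize(combined, "race", privileged="White")

print(combined)
print(binary)
```

Both helpers return new rows, so the original data stays available for comparing preprocessing variants.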

Analysis

See Figure 2 in the paper

Each dot is the score of one algorithm on one fold (LOOCV) of the data, in a given metric

Equality between preprocessing choices would put the dots on the diagonal, where the x and y values are equal; the plots show that this is not the case

Binary sensitive attribute is more accurate when using a fair classifier

Things we knew about ML before don’t necessarily apply to fair classifiers

Fairness may limit our accuracy