MLSS#

2022-03-21#

Lead Scribe Chamudi

The Indian Buffet Process: An Introduction and Review#

Presented by Surbhi

The Indian buffet process is a stochastic process defining a probability distribution over equivalence classes of sparse binary matrices with a finite number of rows and an infinite number of columns.

Unsupervised learning aims to recover the latent structure responsible for generating observed data.

A key problem faced by unsupervised learning:

determining the amount of latent structure (number of clusters, dimensions, or variables)

Treating this as a model selection problem assumes that there is a single, finite-dimensional representation that correctly characterizes the properties of the observed objects.

An alternative is to assume that the amount of latent structure is potentially unbounded, and the observed objects only manifest a sparse subset of those classes or features.

This paper

summarizes the extension of the nonparametric Bayesian approach to models in which objects are represented using an unknown number of latent features.

shows how the Indian buffet process can be used to specify prior distributions in latent feature models, using a simple linear-Gaussian model to illustrate how such models can be defined and used.

Latent Class Models#

A latent class model (LCM) relates a set of observed (usually discrete) multivariate variables to a set of latent variables.

Finite Mixture Model#

A finite mixture model (FMM) is a statistical model that assumes the presence of unobserved groups, called latent classes, within an overall population. Each latent class can be fit with its own regression model, which may have a linear or generalized linear response function.

A mixture model assumes that the assignment of an object to a class is independent of the assignments of all other objects.

If there are K classes, each assignment is drawn from a multinomial distribution θ over those K classes.
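For reference, the finite mixture likelihood (the expression later referred to as Equation 1) can be written in the standard form below. The symbols are assumptions following the usual mixture notation: $x_i$ is the property vector of object $i$, $c_i$ its class assignment, and $\theta_k$ the probability of class $k$.

```latex
P(c \mid \theta) = \prod_{i=1}^{N} P(c_i \mid \theta) = \prod_{i=1}^{N} \theta_{c_i},
\qquad
p(X \mid \theta) = \prod_{i=1}^{N} \sum_{k=1}^{K} p(x_i \mid c_i = k)\, \theta_k
\tag{1}
```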

Infinite Mixture Models#

Defining an infinite mixture model means specifying the probability of X in terms of infinitely many classes, modifying Equation 1 so that the sum over classes runs over infinitely many terms.
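A sketch of the modified expression, assuming the same notation as the finite case ($x_i$ for the properties of object $i$, $c_i$ for its class, $\theta$ now an infinite-dimensional multinomial distribution):

```latex
p(X \mid \theta) = \prod_{i=1}^{N} \sum_{k=1}^{\infty} p(x_i \mid c_i = k)\, \theta_k
```

The only change from the finite case is the upper limit of the sum over classes.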

The Chinese Restaurant Process#

The name comes from a metaphor:

The objects are customers in a restaurant, and the classes are the tables at which they sit.

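If we assume an ordering on our N objects, we can assign them to classes sequentially using the CRP, with objects playing the role of customers and classes the role of tables. The $i$th object is assigned to an existing class $k$ with probability $m_k / (i - 1 + \alpha)$, where $m_k$ is the number of objects already assigned to class $k$, and to a new class with probability $\alpha / (i - 1 + \alpha)$, where $\alpha$ is the concentration parameter.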
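The sequential assignment can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: the function name `sample_crp`, the `seed` argument, and the concentration parameter `alpha` are assumptions introduced here. Customer $i$ joins an existing table $k$ with weight $m_k$ (its current occupancy) and opens a new table with weight `alpha`.

```python
import random


def sample_crp(num_customers, alpha, seed=0):
    """Draw one seating arrangement from a Chinese restaurant process.

    Hypothetical helper for illustration. Returns the table index chosen by
    each customer and the final occupancy count of every table.
    """
    rng = random.Random(seed)
    table_counts = []   # m_k: number of customers seated at table k
    assignments = []    # table index chosen by each customer, in order
    for _ in range(num_customers):
        # Unnormalised weights: m_k for each existing table, alpha for a new one.
        # random.choices normalises them, giving m_k / (i - 1 + alpha) and
        # alpha / (i - 1 + alpha) for the ith customer.
        weights = table_counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(table_counts):
            table_counts.append(1)   # open a new table
        else:
            table_counts[k] += 1     # join an existing table
        assignments.append(k)
    return assignments, table_counts


assignments, table_counts = sample_crp(num_customers=10, alpha=1.0)
```

Larger values of `alpha` make new tables more likely, so the number of occupied tables tends to grow with it.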

Latent Feature Models#

Each object is represented by a vector of latent feature values $f_i$, and the properties $x_i$ are generated from a distribution determined by those latent feature values.

Latent feature values can be continuous, as in factor analysis and probabilistic principal component analysis, or discrete, as in cooperative vector quantization.

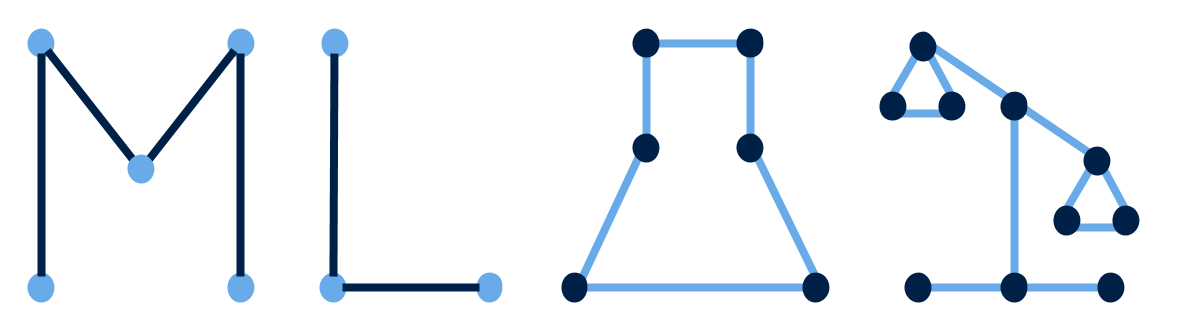

Feature matrices. A binary matrix Z, as shown in a, can be used as the basis for sparse infinite latent feature models, indicating which features take non-zero values. Elementwise multiplication of Z by a matrix V of continuous values gives a representation like that shown in b. If V contains discrete values, we obtain a representation like that shown in c.
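As a small illustration of the caption, the elementwise product of a binary matrix Z with a value matrix V can be sketched as follows. The matrices and the helper name `elementwise_product` are made up for this example; only the operation itself comes from the text.

```python
def elementwise_product(Z, V):
    """Multiply two equally shaped matrices entry by entry."""
    return [
        [z * v for z, v in zip(z_row, v_row)]
        for z_row, v_row in zip(Z, V)
    ]


# Z marks which features each object possesses (rows: objects, columns: features).
Z = [[1, 0, 1],
     [0, 1, 0]]

# V holds a continuous value for every (object, feature) pair.
V = [[0.9, 0.3, 1.4],
     [2.0, 0.5, 1.1]]

# F keeps the value from V wherever Z is 1 and is zero elsewhere.
F = elementwise_product(Z, V)
```

Sparsity in F is controlled entirely by Z, which is why a prior over Z is the key ingredient for sparse latent feature models.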

The Indian Buffet Process#

The Indian buffet process (IBP) defines a distribution over infinite binary matrices by specifying a procedure by which customers (objects) choose dishes (features).

N customers enter a restaurant one after another.

Each customer encounters a buffet consisting of infinitely many dishes arranged in a line.

The first customer starts at the left of the buffet and takes a serving from each dish, stopping after a Poisson(α) number of dishes as his plate becomes overburdened.

The $i$th customer moves along the buffet, sampling dishes in proportion to their popularity, serving himself from dish $k$ with probability $m_k / i$, where $m_k$ is the number of previous customers who have sampled that dish. Having reached the end of all previously sampled dishes, the $i$th customer then tries a Poisson(α/i) number of new dishes.

We can indicate which customers chose which dishes using a binary matrix Z with N rows and infinitely many columns, where $z_{ik} = 1$ if the $i$th customer sampled the $k$th dish.
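The buffet procedure can be sketched as a sampler that returns the non-empty columns of Z. This is an illustrative sketch using only the Python standard library. The names `sample_poisson` and `sample_ibp` and the `seed` argument are assumptions, and the Poisson draw uses Knuth's multiplication method, which is adequate for small rates.

```python
import math
import random


def sample_poisson(rate, rng):
    """Draw from Poisson(rate) by Knuth's method (fine for small rates)."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def sample_ibp(num_customers, alpha, seed=0):
    """Draw a binary matrix Z (list of rows) from the Indian buffet process."""
    rng = random.Random(seed)
    dish_counts = []   # m_k: number of customers who have sampled dish k
    rows = []          # set of dish indices chosen by each customer
    for i in range(1, num_customers + 1):
        # Existing dishes: take dish k with probability m_k / i.
        chosen = {k for k, m_k in enumerate(dish_counts) if rng.random() < m_k / i}
        # New dishes: a Poisson(alpha / i) number, appended on the right.
        num_new = sample_poisson(alpha / i, rng)
        first_new = len(dish_counts)
        chosen.update(range(first_new, first_new + num_new))
        dish_counts.extend([0] * num_new)
        for k in chosen:
            dish_counts[k] += 1
        rows.append(chosen)
    num_dishes = len(dish_counts)
    return [[1 if k in row else 0 for k in range(num_dishes)] for row in rows]


Z = sample_ibp(num_customers=10, alpha=2.0)
```

For the first customer there are no existing dishes, so the loop reduces to taking a Poisson(α) number of new dishes, matching the description above. Only columns with at least one entry are stored; the remaining infinitely many columns of Z are all zero.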

Auto-Encoding Variational Bayes#

Presented by Chan

Generative model#

An ML model that learns from given training data and generates similar data according to the distribution of that training data

Explicit density

Tractable density and approximate density

Usually requires a strong mathematical background to understand

Manifold Hypothesis

The type of directed graphical model under consideration. Solid lines denote the generative model pθ(z)pθ(x|z), dashed lines denote the variational approximation qφ(z|x) to the intractable posterior pθ(z|x). The variational parameters φ are learned jointly with the generative model parameters θ.

Methodology#

Maximum likelihood: $\arg\max_\theta \, p_\theta(x)$, where $p_\theta(x) = \int p_\theta(x, z)\, dz = \int p_\theta(x \mid z)\, p_\theta(z)\, dz$

Variational Inference

$q_\phi(z \mid x) \approx p_\theta(z \mid x)$

The variational bound#

$\int q(z \mid x)\, dz = 1$

$p(x) = \dfrac{p(z, x)}{p(z \mid x)}$

$\mathbb{E}[X] = \int x f(x)\, dx$

$D_{KL}(P \,\|\, Q) = \int p(x) \log \dfrac{p(x)}{q(x)}\, dx$

Evidence Lower Bound#
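A sketch of how the identities from the previous section combine into the evidence lower bound. This follows the standard derivation. The symbol $\mathcal{L}(\theta, \phi; x)$ for the bound is an assumption taken from common usage, not from these notes.

```latex
\begin{aligned}
\log p_\theta(x)
  &= \int q_\phi(z \mid x) \log p_\theta(x)\, dz
     && \text{since } \textstyle\int q_\phi(z \mid x)\, dz = 1 \\
  &= \int q_\phi(z \mid x) \log \frac{p_\theta(z, x)}{p_\theta(z \mid x)}\, dz
     && \text{since } p(x) = p(z, x) / p(z \mid x) \\
  &= \int q_\phi(z \mid x) \log \frac{p_\theta(z, x)}{q_\phi(z \mid x)}\, dz
   + \int q_\phi(z \mid x) \log \frac{q_\phi(z \mid x)}{p_\theta(z \mid x)}\, dz \\
  &= \mathcal{L}(\theta, \phi; x)
   + D_{KL}\big(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\big)
   \;\ge\; \mathcal{L}(\theta, \phi; x)
\end{aligned}
```

The inequality holds because the KL divergence is non-negative. Expanding $p_\theta(z, x) = p_\theta(x \mid z)\, p_\theta(z)$ gives the usual form of the bound:

```latex
\mathcal{L}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  - D_{KL}\big(q_\phi(z \mid x) \,\|\, p_\theta(z)\big)
```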

Stochastic Gradient Variational Bayes(SGVB)#
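The estimator this section refers to can be written as below, assuming the variant in which the KL term is kept in closed form and only the reconstruction term is estimated by sampling. $L$ denotes the number of Monte Carlo samples per data point, and $\tilde{\mathcal{L}}$ is an assumed name for the estimate of the lower bound.

```latex
\tilde{\mathcal{L}}(\theta, \phi; x^{(i)})
  = -D_{KL}\big(q_\phi(z \mid x^{(i)}) \,\|\, p_\theta(z)\big)
  + \frac{1}{L} \sum_{l=1}^{L} \log p_\theta\big(x^{(i)} \mid z^{(l)}\big)
```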

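where $z^{(l)} \sim q_\phi(z \mid x^{(i)})$

When both the prior $p_\theta(z) = \mathcal{N}(0, I)$ and the posterior approximation $q_\phi(z \mid x)$ are Gaussian, the KL term has the closed form $-D_{KL}\big(q_\phi(z \mid x) \,\|\, p_\theta(z)\big) = \frac{1}{2} \sum_{j=1}^{J} \big(1 + \log \sigma_j^2 - \mu_j^2 - \sigma_j^2\big)$, where $J$ is the dimensionality of $z$ and $\mu_j$, $\sigma_j$ are the mean and standard deviation of the $j$th component of $q_\phi$.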
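For a diagonal Gaussian posterior and a standard normal prior, the KL term can be computed directly from the means and standard deviations. A minimal sketch follows. The function name and the list-based interface are assumptions made for illustration, and a diagonal covariance is assumed for $q_\phi$.

```python
import math


def gaussian_kl_to_standard_normal(mu, sigma):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ) for a J-dimensional latent z.

    mu and sigma are sequences of length J holding the per-dimension mean
    and standard deviation of the approximate posterior.
    """
    return -0.5 * sum(
        1.0 + math.log(s * s) - m * m - s * s
        for m, s in zip(mu, sigma)
    )


# The divergence vanishes when the posterior equals the prior ...
kl_same = gaussian_kl_to_standard_normal(mu=[0.0, 0.0], sigma=[1.0, 1.0])

# ... and grows as the posterior mean moves away from zero.
kl_shifted = gaussian_kl_to_standard_normal(mu=[1.0, 0.0], sigma=[1.0, 1.0])
```

Because this term needs no sampling, only the reconstruction term of the bound contributes Monte Carlo noise to the gradient estimate.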

Reparameterization Tricks#

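Sampling $z^{(l)} \sim q_\phi(z \mid x^{(i)})$ directly is not differentiable with respect to $\phi$, so gradients cannot be propagated back through the sampling step.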

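Solution: express $z$ as a deterministic, differentiable function of $\phi$ and an independent noise variable, for example $z = \mu + \sigma \odot \epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$ in the Gaussian case.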
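A small numerical sketch of why drawing the noise separately helps, assuming a one-dimensional Gaussian posterior. The toy objective $f(z) = z^2$ and the helper names are assumptions chosen because the exact answer is known: $\mathbb{E}[z^2] = \mu^2 + \sigma^2$, so the gradients should approach $2\mu$ and $2\sigma$.

```python
import random


def reparameterize(mu, sigma, eps):
    """Deterministic map from the parameters and the noise to a sample z."""
    return mu + sigma * eps


def pathwise_gradients(mu, sigma, num_samples=20000, seed=0):
    """Monte Carlo gradients of E[z^2] with respect to mu and sigma."""
    rng = random.Random(seed)
    grad_mu = 0.0
    grad_sigma = 0.0
    for _ in range(num_samples):
        eps = rng.gauss(0.0, 1.0)   # noise does not depend on mu or sigma
        z = reparameterize(mu, sigma, eps)
        # Chain rule through z = mu + sigma * eps:
        # d(z^2)/dmu = 2z * 1 and d(z^2)/dsigma = 2z * eps.
        grad_mu += 2.0 * z
        grad_sigma += 2.0 * z * eps
    return grad_mu / num_samples, grad_sigma / num_samples


grad_mu, grad_sigma = pathwise_gradients(mu=1.0, sigma=0.5)
```

In a real model the chain rule step is handled by automatic differentiation; the point here is only that once the randomness lives in `eps`, the sample `z` is an ordinary differentiable function of the parameters.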