Missing data Intro#

Lead Scribe: Surbhi Rathore

Opening Notes#

Handling missing data.

Paper - Handling Missing Values when Applying Classification Models#

paper – Presented by Emmely

Analysis over different treatments of missing values at prediction time.#

Alternative courses of action when features are missing:

Discard instances: Simply discarding instances with missing values.

Acquire missing values: In practice, a missing value may be obtainable by incurring a cost, such as the cost of performing a diagnostic test or the cost of acquiring consumer data from a third party.

Imputation: The main idea of imputation is that if an important feature is missing for a particular instance, it can be estimated from the data that are present.

(Predictive) Value Imputation (PVI): Value imputation estimates a value to be used by the model in place of the missing feature. Value imputation is more common in the statistics community.

Distribution-based Imputation (DBI): Distribution-based imputation estimates the conditional distribution of the missing value, and predictions are based on this estimated distribution. Distribution-based imputation is the basis for the most popular treatment used by the (non-Bayesian) machine learning community.

Unique-value imputation: Rather than estimating an unknown feature value it is possible to replace each missing value with an arbitrary unique value.

Reduced-feature models: Rather than imputing, classify the instance with a model induced using only a subset of the features available for the training data (the features that are present in the instance), hence the name. Reduced-feature models can be computationally expensive, since a separate model may be needed for each pattern of missing features.
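The difference between value imputation and distribution-based imputation can be illustrated with a minimal sketch. The classifier here is a made-up lookup table (`P_POSITIVE`) and the feature values are invented for illustration; the sketch also uses the marginal distribution of the feature where the paper speaks of a conditional one, which is a simplifying assumption.

```python
from collections import Counter

# Hypothetical toy classifier: P(class = 1 | colour), as a lookup table.
P_POSITIVE = {"red": 0.9, "green": 0.2, "blue": 0.1}

# Observed values of the feature in the training data (made-up numbers).
training_colours = ["red"] * 4 + ["green"] * 3 + ["blue"] * 3


def predict_pvi(observed):
    """Value imputation: plug in one estimate (here the mode) and predict."""
    imputed = Counter(observed).most_common(1)[0][0]
    return P_POSITIVE[imputed]


def predict_dbi(observed):
    """Distribution-based imputation: average the predictions over the
    estimated distribution of the missing feature."""
    counts = Counter(observed)
    total = sum(counts.values())
    return sum((n / total) * P_POSITIVE[value] for value, n in counts.items())


pvi = predict_pvi(training_colours)  # prediction for the single imputed value
dbi = predict_dbi(training_colours)  # prediction weighted over all values
```

With these numbers the two treatments disagree noticeably, because the most frequent value accounts for only 40% of the observations.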

Missing Completely At Random (MCAR): refers to the scenario where missingness of feature values is independent of the feature values (observed or not). In other words, whether a value is missing has nothing to do with the feature values.
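A minimal sketch of how MCAR missingness might be simulated: each entry is hidden with the same probability, regardless of any value in the data. The function name `mask_mcar` and the 20% rate are illustrative assumptions, not details from the paper.

```python
import random


def mask_mcar(rows, missing_rate, seed=0):
    """Replace each entry with None with probability `missing_rate`,
    independently of every feature value (observed or not)."""
    rng = random.Random(seed)
    return [
        [None if rng.random() < missing_rate else value for value in row]
        for row in rows
    ]


data = [[i, i * 2.0] for i in range(1000)]
masked = mask_mcar(data, missing_rate=0.2)

# Fraction of entries that ended up missing (close to the requested rate).
missing_fraction = sum(v is None for row in masked for v in row) / 2000
```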

Comparison of PVI, DBI and Reduced Modeling#

Reduced-feature modeling consistently outperforms the other two methods.

LOW and HIGH FEATURE IMPUTABILITY Result#

Value imputation (PVI) is generally preferable for high feature imputability, and DBI is generally better for low feature imputability.

REDUCED-FEATURE MODELING SHOULD HAVE ADVANTAGES ALL ALONG THE IMPUTABILITY SPECTRUM#

Because reduced modeling is a lower-dimensional learning problem than the modeling to which imputation methods are applied, it will tend to have lower variance and thereby may exhibit lower generalization error.
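As a rough sketch of reduced-feature modeling, the code below induces a model from only the features present in the instance to be classified. A nearest-centroid classifier stands in for the learner purely for brevity (an assumption of this sketch, not the method used in the paper), and the training data are assumed complete and made up.

```python
def train_centroids(rows, labels, features):
    """Fit a nearest-centroid classifier using only the given feature indices."""
    sums, counts = {}, {}
    for row, label in zip(rows, labels):
        acc = sums.setdefault(label, [0.0] * len(features))
        for j, f in enumerate(features):
            acc[j] += row[f]
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict_reduced(rows, labels, instance):
    """Induce a model on the features present in `instance`, then classify it."""
    present = [i for i, v in enumerate(instance) if v is not None]
    centroids = train_centroids(rows, labels, present)
    point = [instance[i] for i in present]

    def sq_dist(centre):
        return sum((p - c) ** 2 for p, c in zip(point, centre))

    return min(centroids, key=lambda label: sq_dist(centroids[label]))


train_rows = [[1.0, 5.0], [1.2, 4.8], [3.0, 1.0], [3.2, 0.8]]
train_labels = ["a", "a", "b", "b"]

# The second feature is missing, so the model is induced on the first only.
prediction = predict_reduced(train_rows, train_labels, [2.9, None])
```

Training a model per missingness pattern, as done here on demand, is what makes the approach potentially expensive.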

Evaluation with “Naturally Occurring” Missing Values#

By “naturally occurring,” we mean that these are data sets from real classification problems, where the missingness is due to processes of the domain outside the experimenters' control.

Conclusions#

Reduced-feature models are preferable both to distribution-based imputation and to predictive value imputation. Reduced models undertake a lower-variance learning task and do not fall prey to certain pathologies. Predictive value imputation and DBI are easy to apply, but one almost always pays, sometimes dearly, with suboptimal accuracy.

Paper - Missing data imputation using statistical and machine learning methods in a real breast cancer problem#

paper – Presented by Chan

The ‘‘El Alamo-I’’ breast cancer dataset#

One of the largest databases on breast cancer in Spain.

The missing data represent 5.61% of the overall data set.

Statistical methods#

The statistical imputation methods include mean imputation, hot-deck, and multiple imputation (MI) methods based on regression and the expectation maximisation (EM) algorithm.

Mean imputation: can be seen as the simplest case of regression imputation. The mean of the observed (non-missing) values of each variable is used to fill in that variable's missing values for all observations.
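A minimal sketch of mean imputation on a small made-up table, with `None` marking a missing entry. It assumes every column has at least one observed value.

```python
def impute_mean(rows):
    """Fill each missing entry with the mean of its column's observed values."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if row[j] is not None]
        means.append(sum(observed) / len(observed))
    return [
        [means[j] if value is None else value for j, value in enumerate(row)]
        for row in rows
    ]


rows = [[1.0, 10.0], [None, 20.0], [3.0, None]]
filled = impute_mean(rows)
```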

Hot-deck imputation: Nearest neighbour hot-deck imputation is applied, where a nonrespondent is assigned the value of the nearest neighbour record according to a similarity criterion.
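Nearest neighbour hot-deck imputation can be sketched as follows. Squared Euclidean distance over the features observed in the incomplete record is assumed as the similarity criterion, and donors are restricted to complete records; both choices are illustrative assumptions.

```python
def hot_deck_impute(rows):
    """Fill each incomplete row from its nearest complete row (the donor)."""
    donors = [row for row in rows if None not in row]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        # Compare only on the features that are observed in this row.
        shared = [j for j, value in enumerate(row) if value is not None]
        donor = min(
            donors,
            key=lambda d: sum((row[j] - d[j]) ** 2 for j in shared),
        )
        filled.append(
            [donor[j] if value is None else value for j, value in enumerate(row)]
        )
    return filled


records = [[1.0, 10.0], [5.0, 50.0], [4.5, None]]
completed = hot_deck_impute(records)
```

Unlike mean imputation, the filled-in value is always one that actually occurs in the data.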

Multiple imputation: MI replaces an unknown value with a set of plausible values and uses an appropriate model that incorporates random variation. MI has several desirable features:

(1) an appropriate random error is introduced into the imputation process to obtain approximately unbiased estimates of all parameters;

(2) good estimates of standard errors are obtained from repeated imputation; and

(3) MI can be used for any kind of data and any kind of analysis without specialised software.
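The idea of repeated imputation can be sketched for the simplest analysis, estimating a mean. Each missing value is drawn from a normal distribution fitted to the observed values, the analysis is repeated on each completed data set, and the results are pooled with Rubin's rules. This is a deliberately simplified stand-in for the regression and EM based MI methods of the paper: it does not resample the model parameters, and the data are invented.

```python
import random
import statistics


def multiple_impute_mean(values, m=5, seed=0):
    """Estimate a mean from `values` (None = missing) using m imputations."""
    rng = random.Random(seed)
    observed = [v for v in values if v is not None]
    mu, sigma = statistics.mean(observed), statistics.stdev(observed)

    estimates, variances = [], []
    for _ in range(m):
        # Random variation: each missing value is a fresh random draw.
        completed = [rng.gauss(mu, sigma) if v is None else v for v in values]
        estimates.append(statistics.mean(completed))
        variances.append(statistics.variance(completed) / len(completed))

    pooled = statistics.mean(estimates)      # pooled point estimate
    within = statistics.mean(variances)      # average within-imputation variance
    between = statistics.variance(estimates)  # variance between imputations
    total_variance = within + (1 + 1 / m) * between
    return pooled, total_variance


values = [2.0, None, 4.0, 6.0, None, 8.0]
pooled_mean, pooled_variance = multiple_impute_mean(values)
```

The between-imputation term is what lets the standard error reflect the uncertainty caused by the missing values.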

Machine learning methods#

Imputation methods based on machine learning are sophisticated procedures that generally consist of creating a predictive model to estimate values that will substitute the missing items.

These approaches model the missing data estimation based on information available in the data set.

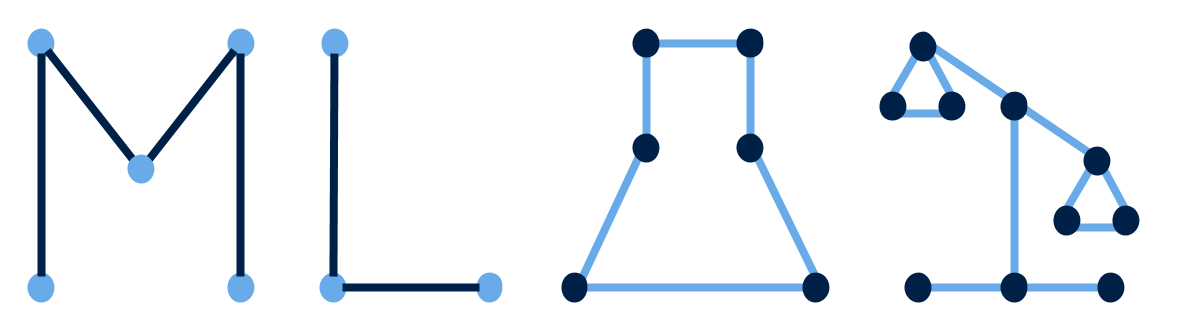

Three well-known machine learning imputation techniques are considered: the multi-layer perceptron (MLP), self-organising maps (SOM) and K-nearest neighbours (KNN).

Multi-layer perceptron :

An MLP consists of multiple layers of computational units interconnected in a feed-forward way.

Each unit in one layer is directly connected to the neurons of the subsequent layer.

A fully connected, two-layered MLP architecture with sigmoidal activation functions was used.

MLP networks can be used to estimate missing values by training an MLP to learn the incomplete features (used as outputs), using the remaining complete features as inputs.

Self-organising maps :

An SOM is a neural network model made out of a set of nodes (or neurons) that are organised on a 2D grid and fully connected to the input layer.

The training of a basic SOM is performed using an iterative process. After the weight vectors are initialised, they are updated using all input training vectors.

After the SOM model has been trained, it can be used to estimate missing values.

K-nearest neighbours :

The KNN imputation algorithm uses only the cases that are most similar to the incomplete pattern.

Given an incomplete pattern x, this method selects the K closest cases that have no missing values in the attributes to be imputed (i.e., the features with missing values in x), such that they minimise some distance measure.

The optimal value of K is usually chosen by cross-validation.

The calculation of the replacement value depends on the type of data: for example, the mode is selected for discrete (categorical) data, while the mean is used for numerical (continuous) data.
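The KNN procedure above can be sketched as follows. The distance is assumed to be squared Euclidean over the numeric features observed in the incomplete pattern, and donor cases are additionally required to be observed on those features; both are simplifications of this sketch. The data and the `categorical` argument are illustrative.

```python
from collections import Counter


def knn_impute(pattern, cases, k, categorical=frozenset()):
    """Impute the None entries of `pattern` from its k nearest cases."""
    missing = [j for j, v in enumerate(pattern) if v is None]
    # Numeric features observed in the pattern are used for the distance.
    used = [
        j for j, v in enumerate(pattern)
        if v is not None and j not in categorical
    ]
    # Donors must be observed on the attributes to be imputed.
    donors = [c for c in cases if all(c[j] is not None for j in missing + used)]
    donors.sort(key=lambda c: sum((pattern[j] - c[j]) ** 2 for j in used))
    neighbours = donors[:k]

    filled = list(pattern)
    for j in missing:
        neighbour_values = [c[j] for c in neighbours]
        if j in categorical:
            # Mode for discrete (categorical) data.
            filled[j] = Counter(neighbour_values).most_common(1)[0][0]
        else:
            # Mean for numerical (continuous) data.
            filled[j] = sum(neighbour_values) / len(neighbour_values)
    return filled


cases = [
    [1.0, 10.0, "low"],
    [1.2, 12.0, "low"],
    [0.9, 11.0, "high"],
    [9.0, 90.0, "high"],
]
result = knn_impute([1.1, None, None], cases, k=3, categorical={2})
```

In practice K would be tuned, for instance by cross-validation as noted above, rather than fixed at 3.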

The ANN prognosis model :

Numerical simulations are performed on the data imputed by the methods described above, using neural networks with a single hidden layer containing between 2 and 50 neurons.

Model evaluation#

The accuracy of the prognosis models is evaluated by testing two main properties: discrimination and calibration.

Discrimination is the ability to separate patients with and without a relapse event.

Calibration is the ability to correctly estimate the risk or probability of a future event.
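A small sketch of how the two properties might be measured. The area under the ROC curve (AUC) is used here for discrimination, and the gap between mean predicted risk and observed event rate as a coarse calibration check. These particular measures are assumptions of this sketch (the notes do not record which ones the paper used), and the labels and risks are invented.

```python
def auc(labels, scores):
    """Probability that a random positive is scored above a random negative
    (ties count as one half)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def calibration_gap(labels, scores):
    """Mean predicted risk minus observed event rate (0 = calibrated overall)."""
    return sum(scores) / len(scores) - sum(labels) / len(labels)


relapse = [0, 0, 1, 1]           # 1 = patient relapsed
risk = [0.1, 0.4, 0.35, 0.8]     # predicted relapse probabilities

discrimination = auc(relapse, risk)
calibration = calibration_gap(relapse, risk)
```

A model can discriminate well while being poorly calibrated, and vice versa, which is why both properties are checked.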

Conclusions#

Machine learning techniques may be the best approach to imputing missing values, as they led to statistically significant improvements in prediction accuracy. The choice of imputation technique depends on the available data and the prediction model used. Also, these results might not generalise to different data sets.

Closing#

Volunteers for paper presentation: Chamudi & Lily