Scientific Method & Philosophy of ML#

Lead Scribe: Derek Jacobs

admin#

grading contract will be posted in time for Wednesday

notes & posting example

can leave out admin in notes; that material will mostly be in other places

Opening Notes#

set the stage for how we think about other work and setting up your projects

Scientific Method in the Science of Machine Learning#

Introduction#

ML is having a hard time explaining some results

Replications are failing

Scientific Method#

Starting from the assumption that there exists accessible ground truth, the scientific method is a systematic framework for experimentation that allows researchers to make objective statements about phenomena and gain knowledge of the fundamental workings of a system under investigation.

—Jessica Zosa Forde and Michela Paganini

process and a social contract

ML might not be systematic

Control the randomness of our algorithms

Procedures/Trust allow comparisons of results

assumes a ground truth

Things can go wrong if there simply isn’t a ground truth

Especially when using ML in social context
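As a small illustration of the point above about controlling the randomness of our algorithms, here is a minimal sketch of a seeded run. The function `toy_training_run` is a hypothetical stand-in for a real training procedure, not something from the paper; the only claim is that a run driven by its own seeded generator can be repeated exactly.

```python
import random


def toy_training_run(seed: int) -> float:
    """Hypothetical stand-in for one training run; returns a made-up score."""
    # A private generator avoids hidden global state shared with other code.
    rng = random.Random(seed)
    # Pretend "random initialization": five weights drawn uniformly.
    weights = [rng.uniform(-1.0, 1.0) for _ in range(5)]
    # Pretend "score": depends only on the random draws above.
    return 0.8 + 0.1 * sum(abs(w) for w in weights) / len(weights)


first = toy_training_run(seed=0)
repeat = toy_training_run(seed=0)
other = toy_training_run(seed=1)
print(first == repeat)  # same seed, same result
print(first == other)   # different seed, different draws
```

Reporting the seed alongside the result is what lets someone else rerun the same experiment and compare.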

Hypotheses

A formed “guess”

In CS, the learning process is as follows

Here’s a problem (that has an answer)

Write code to solve it to specifications

Hypotheses can be falsified through statistical and other analysis

You can show that the opposite (the null hypothesis) is not true, but it is challenging to prove that a hypothesis is true

you can express hypotheses as priors, but that prior wouldn't be the same in the rest of the ML literature

Discuss

When might this not apply?

At the base of scientific research lies the notion that an experimental outcome is a random variable, and that appropriate statistical machinery must be employed to estimate the properties of its distribution.

Treat your accuracies as a random variable as part of an experiment and you’re experimenting around those
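One way to read "treat your accuracies as a random variable" is to repeat the experiment over several seeds and report an estimate with its uncertainty instead of a single number. The sketch below assumes a hypothetical `simulated_accuracy` function with made-up numbers (mean 0.90, spread 0.01), and uses a normal approximation for the interval, which is somewhat too narrow for a small number of runs.

```python
import random
import statistics


def simulated_accuracy(seed: int) -> float:
    """Hypothetical stand-in for one training run; returns a test accuracy."""
    rng = random.Random(seed)
    # Assumed for illustration only: accuracy varies around 0.90 between runs.
    return 0.90 + rng.gauss(0.0, 0.01)


def summarize(accuracies):
    """Return (mean, standard error, approximate 95% interval)."""
    n = len(accuracies)
    mean = statistics.mean(accuracies)
    sem = statistics.stdev(accuracies) / n ** 0.5
    z = statistics.NormalDist().inv_cdf(0.975)  # about 1.96
    return mean, sem, (mean - z * sem, mean + z * sem)


accuracies = [simulated_accuracy(seed) for seed in range(20)]
mean, sem, (low, high) = summarize(accuracies)
print(f"mean={mean:.4f} sem={sem:.4f} approx 95% interval=({low:.4f}, {high:.4f})")
```

With only a handful of runs, a t-based interval would be the more careful choice; the normal approximation is used here to keep the sketch dependency-free.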

In statistics, people chase p-values <= 0.05, which has contributed to a reproducibility crisis

< 40% replicated the original results

there are some problems with NHST but there are alternatives that are still rigorous

Bayesian

Priors allowed

More broadly defined

Everything we do is influenced by our thoughts and so there’s some subjectivity

Interpreting results in terms of previous results

Frequentist

No Priors

Probabilities strictly refer to long-run frequencies of repeatable events like fair coin flips (in 100 flips we expect about 50 heads and 50 tails)

Knowledge does not accrue
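To make the contrast above concrete, here is a minimal coin-flip sketch. The Bayesian side uses a Beta prior with the standard conjugate update (add observed heads and tails to the prior counts); the frequentist side is the plain observed frequency. The flip counts and the uniform prior are made up for illustration.

```python
def frequentist_estimate(heads: int, flips: int) -> float:
    """Observed frequency of heads; uses only the current data."""
    return heads / flips


def bayesian_update(prior, heads: int, tails: int):
    """Conjugate update of a Beta(a, b) prior with coin-flip counts."""
    a, b = prior
    return (a + heads, b + tails)


def posterior_mean(params) -> float:
    """Mean of a Beta(a, b) distribution."""
    a, b = params
    return a / (a + b)


prior = (1.0, 1.0)  # assumed uniform prior over the heads probability
after_first = bayesian_update(prior, heads=7, tails=3)
# The first posterior becomes the prior for the second experiment.
after_second = bayesian_update(after_first, heads=48, tails=42)

print(frequentist_estimate(7, 10), posterior_mean(after_first))
print(frequentist_estimate(48, 90), posterior_mean(after_second))
```

Feeding the first posterior in as the next prior is the sense in which results are interpreted in terms of previous results; the frequentist estimate for the second experiment looks only at the second batch of flips.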

Case study#

HEP:

proposed theory

null hypothesis

careful accounting

model building and hypothesis testing phases

parametric models derived from first principles

statistical test is constructed

ML Analogy:

suspect new activation

formulate quantitative hypothesis

How will it work, what’ll it do, how much will it improve

And behavior of how it’ll change

run experiments

Record outcomes from base models (no intervention)

This is a statistical model of your experiment, not an actual model

Dataset, optimizer, init, hyperparameters, etc are just noise in the question of “does the activation function work”

Or make things specific (improve results with a specific dataset)
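The ML analogy above can be sketched as a small experiment: record accuracies from baseline runs and from runs with the new activation, then test whether the observed improvement is larger than what shuffling the labels would produce. This uses a permutation test, which is one reasonable choice and not necessarily the test the paper has in mind; the accuracies are simulated with made-up means.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(baseline, treatment, n_permutations=10_000, seed=0):
    """One-sided permutation test for mean(treatment) > mean(baseline).

    Returns (observed difference, estimated p-value).
    """
    rng = random.Random(seed)
    observed = mean(treatment) - mean(baseline)
    pooled = list(baseline) + list(treatment)
    n_treat = len(treatment)
    count = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are interchangeable.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_treat]) - mean(pooled[n_treat:])
        if diff >= observed:
            count += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return observed, (count + 1) / (n_permutations + 1)


# Simulated accuracies over 10 seeds each (illustrative numbers only).
data_rng = random.Random(1)
baseline_runs = [0.90 + data_rng.gauss(0.0, 0.01) for _ in range(10)]
new_activation_runs = [0.92 + data_rng.gauss(0.0, 0.01) for _ in range(10)]

observed, p_value = permutation_test(baseline_runs, new_activation_runs)
print(f"observed improvement={observed:.4f}, p-value={p_value:.4f}")
```

Dataset, optimizer, and initialization enter here only through the run-to-run variation in the two lists, which matches treating them as noise around the question being asked.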

Recommendations#

1. formulate hypotheses first

2. statistical testing

3. operate in controlled, reproducible, and verifiable settings

4. negative result workshops

- preregistration workshop at NeurIPS 2021, not an author as speaker

- If your experimental plan is good, results are published regardless of positive or negative case

- new journal

Value Laden Shifts in ML#

paper @brownsarahm reminder from lily talk on incorporating ethics into teaching data structs - environmental cost of algorithms

Looking at how different values influence what is being done in ML

disciplinary shifts are not objective, but value laden

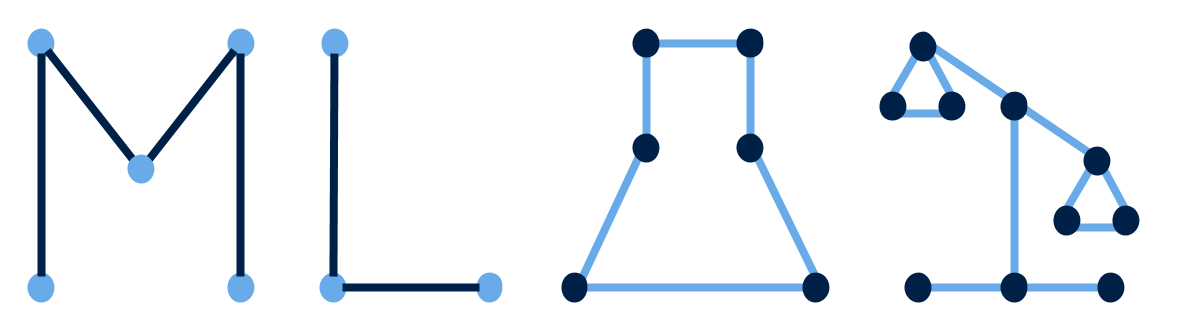

Model Types

Different categories of models typically used for specific purposes

The structure of what our learning algorithm outputs

e.g. CNN for image processing, Linear Models, SVMs, etc

Model types as organizing#

many researchers self-organize in types; eg for reviewing, workshops etc

How do we organize them in a package like sklearn, but also “who knows who works on this”

commitment is fueled by exemplars

has downstream effects:

guide research agenda and problem selection

How model types influence problem selection

Different types are tailored to different problems

Most popular model means most funding

More funding on specific models drives improvements to that area specifically and other problems get lost

constrain search for solutions

prerequisites, eg deep learning and data volume (fig 1) and compute power (fig2)

fig1

Depending on data amount, we may favor one model over the other, and that in turn is researched more

fig2

Shows how much compute power has been used in training AI over time

Recently exponential growth

model types have parallels with, but important differences from, the organizing principles in philosophy of science

decreases theory development

Kuhn

The organizing paradigm defines what questions are valid

For example

If we only have earth water fire ether, we can’t ask what molecules do

When committed to model types…

The whole industry shifts towards it

Like NVIDIA GPUs, computer architectures, etc

Model type is self-reinforcing#

comparing them is influenced by the model type points above (problems, prerequisites)

Comparison between types is value laden#

Applied to ImageNet

Benchmark image classification problem

Until 2011, best error rate was 25% (no deep learning)

2012 - AlexNet reached 16% (deep learning)

By 2016 ImageNet is basically “done” because all the top accuracies are extremely high (97% vs 97.1%)

This doesn’t mean the problem of image classification is solved
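A rough way to see why a gap like 97% vs 97.1% says little is to compare it with the sampling error of an accuracy measured on a finite test set. The sketch below assumes 50,000 evaluation images and treats the two accuracies as independent binomial proportions; both are simplifying assumptions made here for illustration, and a paired comparison on the same images would be the more careful analysis.

```python
import math


def accuracy_standard_error(accuracy: float, n_examples: int) -> float:
    """Binomial standard error of an accuracy measured on n_examples."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n_examples)


def gap_in_standard_errors(acc_a: float, acc_b: float, n_examples: int) -> float:
    """Difference acc_b - acc_a, in units of its standard error.

    Treats the two measurements as independent, which is a simplification
    when both models are scored on the same images.
    """
    se_a = accuracy_standard_error(acc_a, n_examples)
    se_b = accuracy_standard_error(acc_b, n_examples)
    return (acc_b - acc_a) / math.sqrt(se_a ** 2 + se_b ** 2)


n_test = 50_000  # assumed evaluation set size
print(accuracy_standard_error(0.97, n_test))
print(gap_in_standard_errors(0.97, 0.971, n_test))
```

Under these assumptions the gap is smaller than one standard error of the difference, so ranking the two models on this number alone is not well supported.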

prerequisites

compute

Who has access

data

Who has access

What sets are created/curated

Evaluation criteria

in theories: as a whole, internally consistent, predictive, etc

Evaluating theories based on their theoretical virtues is a value-laden activity when theoretical virtues are carriers of values.

—Milli and Dotan

eg consistency requires keeping the bad from the old in new

eg: metrics and discrimination

Something that was sexist previously is still now

Conclusion#

A related question inspired by these issues is who should make decisions in what values are furthered? Who gets to have a voice? In talking about selection of problems in science, Kitcher (2011) argues that all sides should have a say, including laypersons [71]. A question for machine learning is: is the same true for machine learning? Who should have a say about which criteria are important in evaluating model-types? That is itself another value-laden question.

Overall Paper thoughts#

Discuss

General thoughts?

Think about things that’re interesting, confusing, etc (for future reference)

Take care when conducting ML

Feed in data, hope for the best without considering the “why’s”

Discuss

How is this different from how you’ve thought about CS before?

There’s more to consider before actually coding

Vast social influences in things as simple as “can i even get this dataset”

We focus on what works now instead of what hasn’t worked

Discuss

How might the value-laden points about theory development relate to the scientific method points?

Two pillars of hypothesis + statistical testing

Meta points#

(science) the scientific method one is a workshop paper (approx length of your project papers)

this is a position paper (it is less about new experimental results and more about arguing a position, unlike the classic research paper)

next class#

volunteer: Emmely

[Roles for computing in social change](https://dl.acm.org/doi/abs/10.1145/3351095.3372871)

See course site for notes on expectations during presentations