Fairness#

Lead Scribe: Chan

FAIRNESS AND MACHINE LEARNING#

Chapter 2: Classification#

Presented by Damon

Main Goal#

Determine a plausible value for an unknown variable Y given an observed variable X

Essentially trying to predict something based on other factors

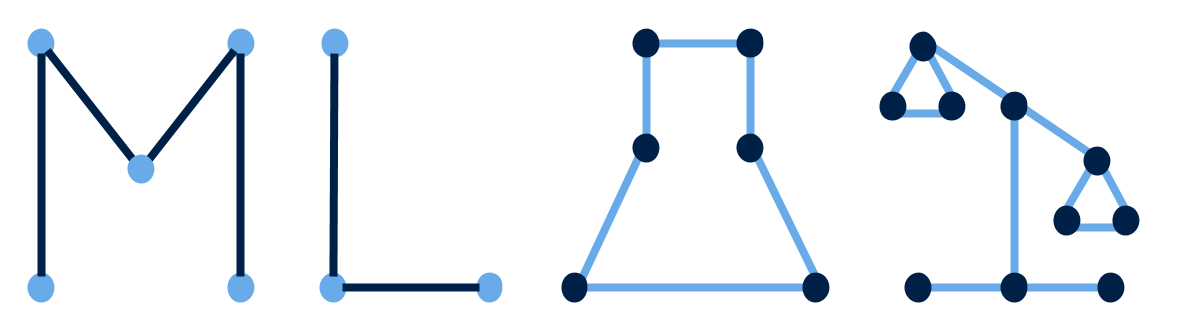

Supervised learning#

The prevalent method for constructing classifiers from observed data

Goal: to identify meaningful patterns

Classifier: a mapping from the space of possible values for X to the space of values that the target Y can assume.

The essential idea is simple: we have labeled data in the form (x1, y1), drawn from a distribution, where x1 is an instance and y1 is its label.

The instances are then partitioned into positive and negative instances according to their labels.

Labels typically come from a discrete set such as {-1, 1} in the case of binary classification.

Statistical Classification Criteria#

How to identify which classifier is best for your purpose

Accuracy: the probability of correctly predicting the target variable

Cond. probability table

The true positive rate corresponds to the frequency with which the classifier correctly assigns a positive label to a positive instance.

Why do we need the weighted average?

P(Ŷ=Y) = P(Ŷ=1|Y=1)P(Y=1) + P(Ŷ=0|Y=0)P(Y=0)

To get the overall accuracy, we need to multiply each conditional probability by its weight, the probability of the corresponding class, so that the result is computable as a single number.
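The weighted-average view of accuracy can be checked numerically. The sketch below is only an illustration: the labels and predictions are made-up toy data, and the function names are hypothetical. It computes accuracy once directly and once as the class-weighted sum of the true positive rate and true negative rate.

```python
def accuracy_from_rates(tpr, tnr, p_pos):
    """Accuracy as the base-rate-weighted average of the per-class rates.

    tpr   = P(Yhat=1 | Y=1)
    tnr   = P(Yhat=0 | Y=0)
    p_pos = P(Y=1)
    """
    return tpr * p_pos + tnr * (1.0 - p_pos)


def accuracy_direct(y_true, y_pred):
    """Fraction of instances whose prediction equals the label."""
    correct = sum(1 for y, yhat in zip(y_true, y_pred) if y == yhat)
    return correct / len(y_true)


# Hypothetical toy data (not from the lecture).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

n_pos = sum(y_true)
n_neg = len(y_true) - n_pos
tpr = sum(1 for y, yh in zip(y_true, y_pred) if y == 1 and yh == 1) / n_pos
tnr = sum(1 for y, yh in zip(y_true, y_pred) if y == 0 and yh == 0) / n_neg
p_pos = n_pos / len(y_true)

weighted = accuracy_from_rates(tpr, tnr, p_pos)
direct = accuracy_direct(y_true, y_pred)
print(weighted, direct)  # the two values agree up to floating-point rounding
```

Without the weights, averaging the two conditional rates would treat both classes as equally common, which is wrong whenever P(Y=1) differs from P(Y=0).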

The Conditional Expectation#

Score: a single real-valued variable summarized from a regression model

A natural score function is the expectation of the target variable Y conditional on the features X we have observed
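For a discrete feature, the conditional expectation score can be estimated by averaging the observed labels within each feature value. The following is a minimal sketch under that assumption; the data, the function names, and the 0.5 threshold used to turn the score into a classifier are all hypothetical choices for illustration.

```python
from collections import defaultdict


def conditional_expectation_score(xs, ys):
    """Estimate r(x) = E[Y | X = x] by averaging Y within each value of x."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for x, y in zip(xs, ys):
        totals[x] += y
        counts[x] += 1
    return {x: totals[x] / counts[x] for x in counts}


# Hypothetical toy data: one categorical feature and a binary target.
xs = ["low", "low", "low", "high", "high", "high", "high"]
ys = [0, 0, 1, 1, 1, 0, 1]

score = conditional_expectation_score(xs, ys)


def classify(x, threshold=0.5):
    """Threshold the score to obtain a binary prediction (threshold is an assumption)."""
    return 1 if score[x] > threshold else 0


print(score)
print(classify("low"), classify("high"))
```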

Sensitive Characteristics (A)#

In many classification tasks, the features X contain or implicitly encode sensitive characteristics of an individual

Simply ignoring these characteristics is dangerous: it can make prediction harder by distorting the correlations in the data.

Independence – R⏊A#

Requires the sensitive characteristic to be statistically independent of the score

Definition 1: The random variables (A, R) satisfy independence if A⏊R

For a binary R, independence simplifies to the condition:

P{R=1∣A=a}=P{R=1∣A=b}

Where R=1 is acceptance and the condition requires the acceptance rate to be the same in all groups.

There could also be a relaxation of the constraint that introduces a positive amount of slack ε>0 and requires that:

P{R=1∣A=a} ≥ P{R=1∣A=b} − ε, or equivalently ε ≥ P{R=1∣A=b} − P{R=1∣A=a}, where we want the difference to be small.
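The independence condition and its relaxed form can be checked on data by comparing acceptance rates across groups. This is a sketch with made-up decisions and hypothetical function names. Requiring the relaxed inequality for every ordered pair of groups is the same as requiring the largest gap between any two acceptance rates to be at most ε.

```python
def acceptance_rate(r, a, group):
    """Empirical estimate of P{R=1 | A=group}."""
    decisions = [ri for ri, ai in zip(r, a) if ai == group]
    return sum(decisions) / len(decisions)


def satisfies_independence(r, a, eps=0.0):
    """Check whether acceptance rates differ by at most eps across all groups.

    Returns (ok, per-group rates, largest gap). eps=0 is the exact condition.
    """
    rates = {g: acceptance_rate(r, a, g) for g in sorted(set(a))}
    gap = max(rates.values()) - min(rates.values())
    return gap <= eps, rates, gap


# Hypothetical toy data: binary decisions R and group membership A.
r = [1, 1, 0, 0, 1, 0, 0, 0]
a = ["a", "a", "a", "a", "b", "b", "b", "b"]

ok_strict, rates, gap = satisfies_independence(r, a)
ok_relaxed, _, _ = satisfies_independence(r, a, eps=0.3)
print(rates, gap, ok_strict, ok_relaxed)
```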

Separation#

Acknowledges that in many scenarios, the sensitive characteristic may be correlated with the target variable.

The separation criterion allows correlation between the score and the sensitive attribute to the extent that it is justified by the target variable

Definition 2: Random variables (R,A,Y) satisfy separation if R⏊A|Y

In the case where R is a binary classifier, separation is equivalent to requiring for all groups a, b the two constraints:

P{R=1∣Y=1,A=a}=P{R=1∣Y=1,A=b}

P{R=1∣Y=0,A=a}=P{R=1∣Y=0,A=b}

Separation requires that all groups experience the same false negative rate and the same false positive rate.
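For a binary classifier, the two separation constraints amount to comparing true positive rates and false positive rates group by group. A minimal sketch, using made-up data and hypothetical function names, and assuming every group contains at least one positive and one negative instance:

```python
def conditional_rate(r, y, a, group, y_value):
    """Empirical estimate of P{R=1 | Y=y_value, A=group}."""
    selected = [ri for ri, yi, ai in zip(r, y, a) if ai == group and yi == y_value]
    return sum(selected) / len(selected)  # assumes the selection is non-empty


def separation_report(r, y, a):
    """True positive rate and false positive rate for each group."""
    return {
        g: {
            "tpr": conditional_rate(r, y, a, g, 1),
            "fpr": conditional_rate(r, y, a, g, 0),
        }
        for g in sorted(set(a))
    }


# Hypothetical toy data: decisions R, true outcomes Y, group membership A.
r = [1, 0, 1, 0, 1, 1, 0, 0]
y = [1, 1, 0, 0, 1, 1, 0, 0]
a = ["a"] * 4 + ["b"] * 4

report = separation_report(r, y, a)
print(report)
```

Separation holds only if both rates match across groups. In this toy data they do not: group a has a lower true positive rate and a higher false positive rate than group b.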

Sufficiency – Y⏊A|R#

Formalizes the idea that the score already subsumes the sensitive characteristic for the purpose of predicting the target

Definition 3: We say that random variables (R, A, Y) satisfy sufficiency if Y⏊A|R

For a binary target Y, a random variable R is sufficient for A iff for all groups a, b and all values r in the support of R, we have

P{Y=1∣R=r,A=a}=P{Y=1∣R=r,A=b}

When R takes only two values, we recognize this condition as requiring parity of positive and negative predictive values across all groups.
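For a binary R, the parity of predictive values can be inspected directly. The sketch below uses made-up data and a hypothetical function name, and assumes each group has both accepted and rejected instances. It computes the positive predictive value P{Y=1 | R=1, A=g} and the negative predictive value P{Y=0 | R=0, A=g} for each group.

```python
def predictive_values(r, y, a, group):
    """Return (PPV, NPV) for one group.

    PPV = P{Y=1 | R=1, A=group}, NPV = P{Y=0 | R=0, A=group}.
    """
    y_given_pos = [yi for ri, yi, ai in zip(r, y, a) if ai == group and ri == 1]
    y_given_neg = [yi for ri, yi, ai in zip(r, y, a) if ai == group and ri == 0]
    ppv = sum(y_given_pos) / len(y_given_pos)
    npv = 1.0 - sum(y_given_neg) / len(y_given_neg)
    return ppv, npv


# Hypothetical toy data: decisions R, true outcomes Y, group membership A.
r = [1, 1, 0, 0, 1, 1, 0, 0]
y = [1, 0, 0, 0, 1, 1, 1, 0]
a = ["a"] * 4 + ["b"] * 4

values = {g: predictive_values(r, y, a, g) for g in sorted(set(a))}
print(values)
```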

Calibration and Sufficiency#

It is sometimes desirable to be able to interpret the values of the score functions as probabilities

A score R is calibrated if, for all score values r in the support of R, P{Y=1∣R=r}=r.

This condition means that the set of all instances assigned a score value r has an r fraction of positive instances among them.

Calibration by Group#

To formalize the connection between sufficiency and calibration, we say that the score R satisfies calibration by group if, for all score values r and all groups a, it satisfies:

P{Y=1∣R=r,A=a}=r

Proposition 1. If a score R satisfies sufficiency, then there exists a function 𝓵: [0,1] → [0,1] so that 𝓵(R) satisfies calibration by group.
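Calibration by group can be checked empirically: within each group, the fraction of positive instances among those assigned score r should be close to r. The sketch below assumes a score that takes only a few discrete values (a continuous score would need binning first). The data and function names are hypothetical.

```python
from collections import defaultdict


def positive_fraction(scores, y, a):
    """Fraction of positives for every (group, score value) pair."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for s, yi, g in zip(scores, y, a):
        totals[(g, s)] += yi
        counts[(g, s)] += 1
    return {key: totals[key] / counts[key] for key in counts}


def calibration_gap_by_group(scores, y, a):
    """Largest |observed positive fraction - score value| within each group."""
    gaps = defaultdict(float)
    for (group, score), frac in positive_fraction(scores, y, a).items():
        gaps[group] = max(gaps[group], abs(frac - score))
    return dict(gaps)


# Hypothetical toy data: the score takes the values 0.25 and 0.75.
scores = [0.25] * 4 + [0.75] * 4 + [0.25] * 4 + [0.75] * 4
y = [1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0]
a = ["a"] * 8 + ["b"] * 8

fractions = positive_fraction(scores, y, a)
gaps = calibration_gap_by_group(scores, y, a)
print(fractions)
print(gaps)
```

In this toy data the score is calibrated for group a but not for group b, where instances scored 0.25 are positive half of the time.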

Relationships Between Criteria#

Each of the criteria constrains the joint distribution in non-trivial ways, so we can suspect that imposing any two of them simultaneously over-constrains the space to the point where only degenerate solutions remain.

The happy path of satisfying multiple fairness criteria at once is generally unattainable.
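One instance of this tension can be illustrated numerically. This is a sketch, not a proof, and covers only separation versus sufficiency. Suppose a binary classifier satisfies separation, so both groups share the same true positive rate and false positive rate, but the groups have different base rates P{Y=1}. By Bayes' rule the positive predictive value then differs between the groups, so sufficiency fails. All numbers below are hypothetical.

```python
def ppv(tpr, fpr, base_rate):
    """Positive predictive value P{Y=1 | R=1} via Bayes' rule."""
    true_pos = tpr * base_rate            # P{R=1, Y=1}
    false_pos = fpr * (1.0 - base_rate)   # P{R=1, Y=0}
    return true_pos / (true_pos + false_pos)


# Same error rates in both groups (separation holds) ...
tpr, fpr = 0.8, 0.2

# ... but different hypothetical base rates.
base_rate_a, base_rate_b = 0.5, 0.2

ppv_a = ppv(tpr, fpr, base_rate_a)
ppv_b = ppv(tpr, fpr, base_rate_b)
print(ppv_a, ppv_b)  # the predictive values differ, so sufficiency is violated
```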

Machine Bias#

Presented by Derek

The premise#

Brisha Borden & a friend - 18 years old

Scooter

Vernon Prater - “Seasoned”

Shoplifting

Both similar crimes

Computer program predicting likelihood of recidivism

Borden was rated a higher risk than Prater

Two years later, opposite held true

Risk Assessments#

Becoming increasingly popular in courtrooms

9 states provide scores to judges during sentencing

Following the Warnings of AG Holder#

The sentencing commission did not launch a study of risk scores

ProPublica did launch a study

>7000 people arrested in Broward Cty, FL

Check how many were charged with new crimes over 2 years

Results

Unreliable

Biased

In Theory#

Risk scores are great

High scores mean more likely to commit crime

Vice versa for low scores

Simple…?

In Practice#

People are extremely complex

Countless factors go into recidivism such as

employment

housing status

financial situation

As a result, unless we individualize the risk assessment, the results will be biased

The Problem#

People tend to blindly trust tech.

Many jurisdictions adopt Northpointe’s software without testing

Using tech is the easy way out

“Easy to use and gives ‘simple/effective’ charts for judicial review”

A 2009 study reported a 68% accuracy rate for the scoring software

Overall#

Using tech in social context is extremely complex

People are unique and countless factors go into individual behavior

With recidivism specifically

Black people were more likely to be scored as higher risk than White people

Software relied upon too much

Studies have concluded risk scores do not reflect reality.

Gender Shades#

Presented by Derek

Intro#

AI is widely used in society

Facial recognition software can realistically be used to identify suspects

Algorithms trained on biased data result in algorithmic discrimination

This work focuses on gender classification from facial images

Intersectional Benchmark#

Gender classification means we need defined classes

The dataset should contain varied physical attributes for subgroup accuracy analysis

Pilot Parliaments Benchmark (PPB) dataset

high quality photos

reliable sources

Commercial Gender Classification Audit#

Classifiers perform best on lighter, male faces

Classifiers perform worst on darker female faces

Microsoft, IBM, Face++ analyzed

Max disparity in error rate between best/worst classified groups is 34.4%
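The audit idea of comparing error rates across intersectional subgroups can be sketched as follows. The records below are invented for illustration and are not the study's data or results. The function names and the record layout (skin type, true gender, predicted gender) are also assumptions.

```python
from collections import defaultdict


def error_rates_by_subgroup(records):
    """Error rate for each (skin_type, true_gender) subgroup.

    records: iterable of (skin_type, true_gender, predicted_gender).
    """
    errors = defaultdict(int)
    counts = defaultdict(int)
    for skin_type, true_gender, predicted_gender in records:
        key = (skin_type, true_gender)
        counts[key] += 1
        errors[key] += int(true_gender != predicted_gender)
    return {key: errors[key] / counts[key] for key in counts}


def max_disparity(rates):
    """Gap between the worst and best classified subgroups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical records, four per subgroup.
records = (
    [("lighter", "male", "male")] * 4
    + [("lighter", "female", "female")] * 3 + [("lighter", "female", "male")]
    + [("darker", "male", "male")] * 3 + [("darker", "male", "female")]
    + [("darker", "female", "female")] * 2 + [("darker", "female", "male")] * 2
)

rates = error_rates_by_subgroup(records)
disparity = max_disparity(rates)
print(rates)
print(disparity)
```

Reporting only the overall error rate would hide the gap between subgroups, which is why the breakdown by both attributes matters.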

Dissecting racial bias in an algorithm used to manage the health of populations#

Presented by Derek

Black people had to have more chronic conditions to have a higher risk score

Training used total medical expenditure.

Could have used total sickness as a factor to train to avoid bias.