2022-03-02#

Lead Scibe: Derek

Multi Objective in Data Mining#

Presented by Chamudi

Intro#

Model Quality represented by an n-dimensional vector

n is the num of qual criteria to be optimized

2 scenarios

Predictive tasks

Classificaiton, regression, etc

Which model is better

Easily interpretable dozen node decision tree with 92% test accuracy or non-interpretable hundred node decision tree with 95%

The answer depends on the problem at hand and what the user prefers

May be after just accuracy or you may want to better understand what’s going on

One may overfit/underfit, etc

Alex’s MNIST example → for another class accuracy was the end goal so the non-interpretable would be the better move in that instance

If a bank uses it, making mistakes may cost some money, but non-interpretability may cause a lawsuit

Attribute Selection

Select a subset of attributes that are relevent for the target data mining task

Maximize accuracy of the model, and minimize the number of selected attributes

Trade offs

Subset A is more accurate than B, but B has fewer attributes

Three Approaches for Coping with Multi Objective Problems#

Transforming multi-objective to single-objective

Assigning a weight to each objective and combining values of weighted criteria into single value by adding or multiplying all the weightred criteria

Q = w1*c1 + w2*c2 + … + wn*cn or

Q = c1W1 * c2W2 * … * cnWn

A couple scnearios

Rule induction algorithms for classification

Attribute selection algorithms for classification

Arguments For

Simplicity

Easy to use

Arguments Against

Setting of weights is ad-hoc

Each weight is “magic”, justified vaguely

Hard for user to define the best setting of weights a priori without knowing results of the research

But you could basically brute force it and pick the best weights

Mixing different units of measurement

Model quality criterias have different scales in their units of measurement

Can be fixed with normalization but only to a certain extent

Mixing apples/oranges

Non commensurable criteria should not be added together

For example 50k salary + 5 dependents

Lexicographic Approach

Assign different priorities to different objectives then optimize each in order of priority

Usually compare performance measure for the highest priority objective unless one is significantly better than the other

Arguments For

Recognizing non commensurability of different quality data

Avoids the 3 drawbacks of weighted formula approach

Simpler and easier than Pareto

Arguments Against

Introducing a new Ad-Hox parameter

Requires one to specify a tolerance threshold for each criterion

Pareto Approach

Use multi objective algorithm to solve the original multi objective problem

Pareto Dominance

s1 Dominates s2 iff s1 is strictly better than s2 w.r.t. at least one of the objectives being optimized and s1 is not worse than s2 w.r.t. all criteria being optimized

theres one ci s.t. s1(ci) > s1(ci) and

for all ci, i=1…k, s1(ci)>=s2(ci)

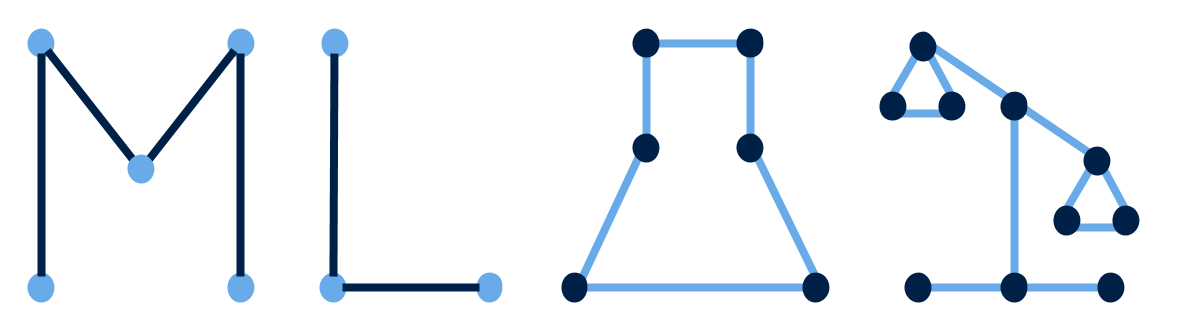

See figure 1 of paper

Pareto vs Single Objective

Multi objective retures a set of non dominated solutions rather than a single solution

Search by multi objective should explore wider area of the search space and track non dominated solutions found to find as many solutions as possible

Much more complex than single objective

Arguments For

Multiple runs of a single-objective optimization algorithm is inefficient and ineffective

Minimum description length principle comes with a price

How to encode hypothesis and its data exceptions into bits of info

Arguments Against

Multiple runs of a single-objective optimization algorithm seems “enough”

Minimum description length principle seems “enough”

Moral of the story: Pareto and Lexicographic are the way to go for multi objective