MLSS#

2022-03-28#

Lead Scribe - Chan

A Survey on Semi-Supervised Learning#

Presented by Derek

Semi-supervised learning

In this survey

attempting to give a comprehensive oversew of the state of semi-supervised learning

new taxonomy for semi-supervised classfication methods

Background

Assumptoions SSL

Underlying marginal data distribution (p(x) over the input space contains info about the posterior distribution p(y|x)

Multiple assumptions based on interaction of p(x) and p(y|x)

assumption –> how does p(x) tells us something about p(y|x)?

not time-series data

points are not related to each other

samples are not conditional to each other.

classes are seperable

Smoothness

For two input points x,x’ that are close in the input space, the labels y,y’ should be the same

Low-Density

implies the decision bounday of a classifer should pass through low density regions in the input space

where few points are observed

smoothness assumption is not violated

but if bounday is in high density, could violate the smoothness assumption

Manifold

manihold hypothesis - data lives in a lower dimension than we expect.

Connection to Clustering

cluster assumption is often included

When does SSL work?

algorithm needs to be able to extract the info relating p(x) to p(y|x) [hard to answer]

Empirical Eval of SSL

Many decision influence the relative perforamce of algorithms

Data set partitioning, hyperparameter tuning

With SSL we also have

what should be labeled and what should not be

can choose to eval performance on unlabeled data or on a disjoint set.

Toy data sets are usually used to show biability of a new approach

Data set choice can have large impact on performance.

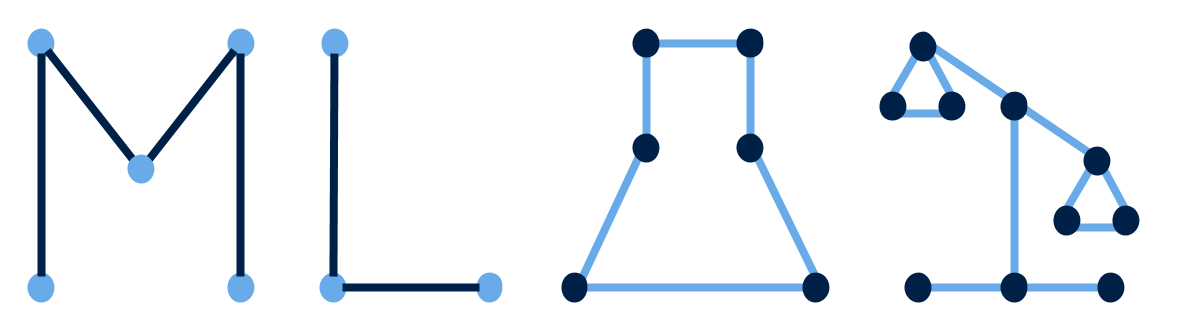

Taxonomy

Inductive Methods

wrapper methods

train classifers on labeled data –> use predictions to generate additional labeled data -> retrain on the pseudo-labeled data in addtion to existing labeled data.

among the oldest and most widely known algorithms for SSL

Advantage: can be used with ~any supervised base learner

Broken into self training, co-training, and pseudo-labelled boosting methods.

self training

most basic pseudo labelling approach

single supervised classifier iteratively trained on both labelled data and data that’s been pseudo labelled

Many design decisions

Selection of data to pseudo label

Re-use of pseudo labelled data

Stopping criteria

co-training

2 or more supervised classifiers that are iteratively trained on the labelled data, adding most confident predictions to the labeled set of the other supervised classifier at each iteration.

important that base learners are not strongly correlated in their predictions (classifier diversity)

single view and multi view

co-regularization

Boosting

bagging

each base learner is given a set of i data points, which are sampled uniformly at random with replacement from the original data set

boosting

each base learner is dependent on previous base learners

SSMBoost

AdaBoost exteneded to SSL setting

ASSEMBLE

gets rid of base learners

SemiBoost

Relies on the manifold assumption, using principles from graph-based methods

Other SSL Boosting Methods

RegBoost

unsupervised preprocessing

Uses unlabelled and labelled data in two separate stages

Feature extraction

Attempts to find a transformation of input data such that performance of the classifier improves or such that its construction becomes computationally more efficient

Many feature extraction methods operate without supervision (like PCA), others operate on labelled data and extract features with high predictive power

Relates to autoencoding (like we saw last week)

Cluster-then-label

Form a group of methods that join clustering and classification processes

First apply an unsupervised or semi-supervised clustering algorithm to all data, then uses resulting clusters to guide classification

Pre-Training

Unlabelled data used to guide decision boundary towards potentially interesting regions before applying supervised learning

intrinsically semi-supervised methods

Don’t rely on intermediate steps or supervised base learners

Extensions of existing supervised methods to include unlabeled samples in the objective function

Maximum-margin methods

SVMs

Semi Supervised SVMs

Gaussian Processes

Density Regularization

Pseudo-labeling as a form of margin maximization

Perturbation-based methods (PB)

when we perturb a data point with some noise, the predictions for noisy and clean inputs should be similar

smoothness assumption

Methods of incorporating smoothness assumption into a given learning algorithm

Apply noise to input data and incorporate the difference between clean and noisy into the loss function

Implicitly apply noise to data points by perturbing the classifier

Semi-Supervised Neural Networks

Simplicity and efficiency of back propagation makes it easy to add an unsupervised component to the loss function

Because NNs are hierarchical, they are also viable candidates for semi-supervised approaches

Ladder networks

Extends a feedforward network to incorporate unlabelled data by using the feedforward part of the network as the encoder of a denoising autoencoder

Adding a decoder, and including a term in the cost function to penalize the reconstruction cost

Pseudo-ensembles

Perturbing the neural network model itself

Robustness through penalizing the differences between the activations of perturbed network and those of the original network for the same input

Unperturbed parent model is perturbed to obtain one or more child models

⨅ models

Computing perturbed models directly

Using dropout and penalizing differences in the final layer activation of the networks using squared loss

Mean teacher

Average weights = teacher

latest model = student

Temporal Ensembling

virtual adversarial training

semi-supervised mixup

Manifolds

an m-dim manifold is a subspace of the original input

Manifold regularization

Introduce regularization term that captures the fact that manifolds represent lower dimension Euclidean space

Manifold approximation

Manifold explicitly approximated, then used in a classification task

Generative models

model that process the generation of data

Mixture models

When prior knowledge about p(x,y) is available, GM can be strong

Data is composed of k Gaussian distributions → fix model as a mixture of k gaussian components

Generative adversarial networks

Variational autoencoders

Transductive Methods

do not construct a classifer for entire input space

Limted to exactly those objects encountered during training phase.

info must be propagated through direct connections between data points (graph-based approaches)

cannot distinguish between training and testing phase

General Framework for graph based method

graph construction

creation

weighing

Most important aspect of graph-based methods

Form edges between nods (adj. matrix)

attaching weights to them (weight matrix)

Adj. matrix construction

KNN, ε-neighborhood, b-matching

connects nodes to all nodes to which distance is at most ε

find subsets of edges in complete graph such that each each node has degree b and the sum of edge weights is maxed.

Graph weighting

Simultaneous graph

Inference

forming predictions for the unlabelled data points

Hard label assignments: graph min-cut

Probabilistic label assignments: Markov random fields

Efficient Probabilistic label assignments: Gaussian random fields

like MRFs

Handling label noise and irregular graphs

approach that addresses two issues

handling label noise

influence of nodes with high degree in irregular graphs is large

Further research on graph based inference

absorption

class imbalance sensitivity

Scalable transductive learning

Classification in network data

represented as graphs

Learning with Noisy Labels#

Presented by Derek

Intro

design supervised learning algo. learning from noisy labels

approaches

modified/Proxy loss is an unbiased estimate of the loss function

Based on the idea that the probability of misclassification under noisy distribution differes from clean distribution where a given threshold is used to decide the label.

Problem Setup and Background

Ỹ–> incorrect data

not every single label is wrong (<1)

𝑓 is a decision function

important quantities associated with 𝑓

l is a convex function calibrated to a loss

In the event 𝑓 is not quantified in a minimization, the minimization is overall measurable functions.

Methods of Unbiased Estimators

Method of Label-Dependent Costs

Proposed Proxy Surrogate Losses

Given a surrogate loss function and this decomposition

Conclusions

Proposed methods are competitive and able to tolerate moderate-high noise

Methods can benefit from knowing the noise rates

Algorithms give a new family of methods applicable to positive unbalanced learning problems